In the world of enterprise AI, change is afoot. Organizations have long deployed artificial intelligence in the form of specialized, isolated models – a recommendation system here, a fraud detection engine there – each doing its own narrow job.

But a new paradigm is emerging. AI agents and agentic workflows are transforming these once-disconnected solutions into modular, orchestrated, goal-driven systems. This post explores how enterprise AI architectures are evolving from siloed models toward flexible AI agent frameworks, and what this means for forward-looking tech leaders.

Traditional Enterprise AI: Siloed Models and Static Workflows

Not long ago, a typical enterprise AI architecture consisted of scattered point solutions. Each AI model was like an island: a recommendation engine suggesting products on the e-commerce site, a fraud detection model screening transactions in finance, a predictive maintenance model monitoring equipment in operations. These models were often developed and deployed independently, embedded into specific applications or pipelines. They excelled at their individual tasks, but rarely “talked” to one another.

Such architectures were static and task-specific. If a business process needed multiple AI capabilities, the integration was usually hard-coded – for example, a workflow might first run a credit risk model, then separately call a marketing model. Coordination between models was minimal, typically handled by deterministic business logic rather than by the AI itself. In short, enterprise AI lived in silos, with each model offering insights or predictions for a single use case.

This traditional approach has its limits. Siloed models can’t easily tackle complex, multi-step goals that span business domains. They also tend to duplicate effort – every new use case may spawn yet another standalone AI system. As enterprises aim to become more AI-driven, the need for a more unified, adaptive AI architecture has become clear. Enter AI agents and agentic workflows.

From Isolated Models to Agentic Workflows: AI Agents Take Charge

The rise of AI agents marks a shift from static predictions to active decision-making and orchestration. An AI agent is more than just a model – it’s a system capable of perceiving information, making decisions, and taking actions to achieve a goal. Instead of only answering a narrow question (e.g., “Is this transaction fraudulent?”), an AI agent can pursue broader objectives (“Investigate and handle this transaction end-to-end”). This means moving beyond isolated models towards modular, goal-driven workflows where AI components collaborate.

What does this look like in practice? Imagine an AI agent assisting in customer service. In a single flow, it might analyze a customer’s query, retrieve relevant information, decide on the next steps, run a sentiment analysis model, query a product recommendation service, and then formulate a resolution. Previously, each of those steps would involve separate models and manual integration. AI agents orchestrate them seamlessly within one system.

Recent advances in large language models (LLMs) and planning algorithms have supercharged this agentic approach. Modern AI agents can chain together tasks and tools autonomously. They are designed to retrieve and analyze information, make decisions via structured workflows, automate multi-step processes, and even execute API calls or trigger external actions. In other words, the capabilities that used to reside in isolated boxes can now be invoked on-the-fly by a smart agent operating with a higher-level goal in mind.

This shift is akin to moving from a set of powerful solo performers to an orchestra led by an AI conductor. The agent is the conductor that knows when to cue each instrument (model) and how to harmonize their outputs. We see early examples of this in the wild: frameworks like AutoGPT and BabyAGI gained attention for demonstrating how an AI could iteratively plan and execute tasks using multiple tools (though these are experimental). Tech companies are exploring similar concepts under various names – for instance, Microsoft’s TaskMatrix.AI and Salesforce’s xLAM initiative each aim to enable multiple AI components working in concert. The trend is clear: AI solutions are becoming more integrated and agentic, focusing on end-to-end outcomes rather than single-step predictions.

Inside an AI Agent Architecture (No More “Hybrid” Silos)

What does an agent-driven architecture look like under the hood? It helps to contrast it with the old setup. Earlier, we might design a “hybrid” solution by bolting one AI system onto another (e.g., an NLP model feeding into an RPA script). Now, instead of ad-hoc hybrids, we intentionally design AI agents and agentic workflows as first-class architecture components.

A modern AI agent architecture typically includes several layers working together:

Cognitive Core (Brain)

Often powered by an advanced AI model (such as an LLM), this is the reasoning engine. It interprets goals, context, and inputs (like user queries or sensor data). The core generates plans or decisions, often in natural language or some planning dialect, which makes it adaptable and expressive.

Tool/Model Integration Layer (Skills)

Here we have the collection of specialized models, data sources, and services that the agent can utilize as tools. This might include legacy ML models (recommendation systems, fraud detectors, vision or OCR models), knowledge bases, databases, and even traditional software APIs. The agent taps these tools on demand. For example, if the agent needs to verify a user's identity, it can invoke a facial recognition API; if it needs to recommend next-best actions, it calls the recommendation engine. In essence, the agent treats existing systems as modular skills it can deploy.
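As a rough illustration of tools invoked on demand, the sketch below keeps a small name-to-function map that an agent could consult at run time. The functions `verify_identity` and `recommend_next_action`, the `TOOLS` dictionary, and the `invoke` helper are hypothetical placeholders invented for this example, not part of any specific product or library.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for existing enterprise services (assumptions for illustration).
def verify_identity(user_id: str) -> bool:
    """Pretend identity check; a real system would call an external API."""
    return user_id.startswith("u-")

def recommend_next_action(user_id: str) -> str:
    """Pretend recommendation engine returning a next-best action."""
    return f"offer-loyalty-discount:{user_id}"

# The agent's skills: a name-to-callable map it can look up when needed.
TOOLS: Dict[str, Callable[[str], object]] = {
    "verify_identity": verify_identity,
    "recommend_next_action": recommend_next_action,
}

def invoke(tool_name: str, argument: str) -> object:
    """Look up a tool by name and call it, failing loudly on unknown tools."""
    if tool_name not in TOOLS:
        raise KeyError(f"unknown tool: {tool_name}")
    return TOOLS[tool_name](argument)
```

In this arrangement, replacing an entry in `TOOLS` changes which implementation the agent uses without touching `invoke`.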

Orchestration & Memory (Workflow Manager)

This component handles the sequencing of tasks and retains context. The agent breaks a high-level goal into sub-tasks, decides the order (a plan), and keeps track of intermediate results. It might use an internal memory or scratchpad to remember what’s been done so far or what information has been gathered. This is crucial for multi-step workflows so that the agent doesn’t lose track of the overall conversation or process state.
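The sequencing and scratchpad ideas can be sketched in a few lines. This is a minimal example under stated assumptions: the plan is fixed by hand (a real agent would generate it from the goal), and `Scratchpad`, `run_plan`, and the step names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

@dataclass
class Scratchpad:
    """Working memory: the goal plus every intermediate result so far."""
    goal: str
    results: Dict[str, object] = field(default_factory=dict)
    completed: List[str] = field(default_factory=list)

# Each step reads the scratchpad and returns a result stored under its name.
Step = Callable[[Scratchpad], object]

def run_plan(goal: str, plan: List[Tuple[str, Step]]) -> Scratchpad:
    """Execute (name, step) pairs in order, recording each result."""
    pad = Scratchpad(goal=goal)
    for name, step in plan:
        pad.results[name] = step(pad)
        pad.completed.append(name)
    return pad

# A fixed, hypothetical plan with made-up sales figures.
plan: List[Tuple[str, Step]] = [
    ("fetch_sales", lambda pad: [120, 80, 100]),
    ("total", lambda pad: sum(pad.results["fetch_sales"])),
    ("summary", lambda pad: f"{pad.goal}: total={pad.results['total']}"),
]

pad = run_plan("Monthly sales report", plan)
print(pad.results["summary"])  # prints "Monthly sales report: total=300"
```

Because later steps read earlier results from the scratchpad, the process state survives across the whole workflow rather than living in any single step.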

Interface & Interaction (Conversation Layer)

Many AI agents present themselves through conversational or API interfaces. In enterprise settings, this could be a chat interface where a user issues a request (“Generate a monthly sales report and email it to the team”), or it could be a backend API that receives tasks from other software. The interface translates user intents into the agent’s internal goals and conveys the agent’s results back to the user in an accessible format (natural language response, report, action confirmation, etc.). This layer also handles important enterprise requirements like authentication, access control, and hand-offs to humans when necessary.

Governance & Safety (Guardrails)

Enterprise AI agents must operate within strict boundaries. This part of the architecture enforces security policies, ethical guidelines, and regulatory compliance. For instance, an agent might be allowed to fetch customer data but not permitted to delete records unless certain conditions are met. It also involves monitoring the agent’s actions and decisions, with logging and oversight mechanisms so that human administrators can audit what the agent is doing. This ensures the system remains secure and trustworthy, crucial for CxOs who need to manage risk.
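One way to picture such a guardrail is a default-deny policy check that records every decision. The sketch below assumes a simple rule set (reads always allowed, deletions only with human approval); the action names, `Action` class, and `AUDIT_LOG` list are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Action:
    name: str                        # e.g. "fetch_customer" or "delete_record"
    approved_by_human: bool = False  # set by a hypothetical approval step

# Hypothetical policy table for illustration.
ALWAYS_ALLOWED = {"fetch_customer"}
NEEDS_APPROVAL = {"delete_record"}

# Every decision is appended here so administrators can audit it later.
AUDIT_LOG: List[Dict[str, object]] = []

def is_permitted(action: Action) -> bool:
    """Apply the policy and append the decision to the audit log."""
    if action.name in ALWAYS_ALLOWED:
        allowed = True
    elif action.name in NEEDS_APPROVAL:
        allowed = action.approved_by_human
    else:
        allowed = False  # default deny for anything not listed
    AUDIT_LOG.append({"action": action.name, "allowed": allowed})
    return allowed
```

A production policy engine would be far richer, but the shape is the same: the check sits between the agent's decision and the tool call, and nothing runs unlogged.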

Within such an architecture, the term “hybrid agents” is outdated – the system is no longer a clumsy fusion of AI parts. Instead, we have a cohesive AI agent framework where each module has a clear role. The agent’s workflow is “agentic” in the sense that it can dynamically decide which modules to invoke, when to loop back or iterate, and when to stop. It’s a structured yet flexible approach: structured, because the available tools and rules are defined; flexible, because the agent can sequence them in novel ways to meet a goal.

Crucially, this architecture is modular. Enterprises can upgrade or swap out components (e.g., a better fraud detection model) without redesigning the whole system – the agent will simply start using the new tool. It’s also scalable: multiple agents or micro-agents can be deployed for different tasks, all following a similar architecture and governed by the same safety layer. This modular, agent-oriented design is far more adaptable than the brittle, point-to-point integrations of the past.

AI Agents as Orchestrators: Integrating Legacy Models and Systems

One of the most powerful aspects of AI agents in an enterprise architecture is how they leverage existing investments. Those standalone models and systems from the “old” architecture aren’t discarded – rather, they become services in the agent’s toolkit. The AI agent essentially serves as an orchestrator of these capabilities, often achieving more than the sum of its parts.

For example, consider how a modern e-commerce company could upgrade its architecture. Traditionally, the company might use a standalone personalization model for product recommendations and separately use an inventory forecasting model. In an agent-driven architecture, a shopping assistant agent can call the personalization model as one step in helping a customer (“What suits this customer’s preferences?”) and then consult the inventory model as another step (“Is the item in stock or should we suggest alternatives?”). The agent can then combine those insights to decide the best answer or action. The former siloed models now operate as cooperative parts of a broader workflow, coordinated by the agent.
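The shopping assistant scenario can be sketched as follows. Both model functions are hypothetical stand-ins returning hard-coded data, and the combination rule (suggest the top-ranked item that is in stock) is one plausible choice among many.

```python
from typing import Dict, List

# Hypothetical stand-ins for the two existing models (illustrative data only).
def personalization_model(customer_id: str) -> List[str]:
    """Return product IDs ranked by predicted preference."""
    return ["boots-01", "jacket-07", "scarf-03"]

def inventory_model(product_id: str) -> int:
    """Return the number of units expected to be available."""
    stock: Dict[str, int] = {"boots-01": 0, "jacket-07": 5, "scarf-03": 12}
    return stock.get(product_id, 0)

def shopping_assistant(customer_id: str) -> str:
    """Suggest the highest-ranked product that is actually in stock."""
    for product_id in personalization_model(customer_id):
        if inventory_model(product_id) > 0:
            return product_id
    return "no-suggestion"

print(shopping_assistant("c-1"))  # prints "jacket-07"
```

Here the top preference is out of stock, so the assistant falls through to the next ranked item, which neither model could have decided on its own.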

Research from Microsoft and others demonstrates the feasibility of this approach. In the HuggingGPT project, an LLM-based agent is used as a controller to manage and sequence many specialized AI models for different tasks. The process works as follows: the agent (ChatGPT in this case) plans the tasks needed to fulfill a request, selects the appropriate models (based on a description of their capabilities), executes each sub-task with those models, and then integrates the results into a final output. This is a blueprint for how an enterprise agent might dynamically tap dozens of internal APIs and models to accomplish a complex goal. Instead of a human orchestrating a workflow across departments and systems, the AI agent can do it in real time.
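The four stages (plan, select, execute, integrate) can be mimicked in a toy form. This is not HuggingGPT's code: a keyword stub stands in for the LLM planner, and the `MODELS` catalog, its two fake models, and all function names are assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical model catalog: name -> (capability description, callable).
MODELS: Dict[str, Tuple[str, Callable[[str], str]]] = {
    "translator": ("translate text to English", lambda text: f"EN({text})"),
    "summarizer": ("summarize text", lambda text: f"SUMMARY({text})"),
}

def plan_tasks(request: str) -> List[str]:
    """Stage 1, task planning. A keyword stub stands in for the LLM here."""
    tasks = []
    if "translate" in request:
        tasks.append("translate")
    if "summarize" in request:
        tasks.append("summarize")
    return tasks

def select_model(task: str) -> str:
    """Stage 2, model selection: match the task against capability descriptions."""
    for name, (description, _) in MODELS.items():
        if task in description:
            return name
    raise LookupError(f"no model for task: {task}")

def handle(request: str, payload: str) -> str:
    """Stages 3 and 4: run each selected model in turn, then wrap the result."""
    result = payload
    for task in plan_tasks(request):
        _, model = MODELS[select_model(task)]
        result = model(result)
    return f"Done: {result}"

print(handle("translate and summarize this memo", "Bonjour"))
# prints "Done: SUMMARY(EN(Bonjour))"
```

The point of the structure is that the controller only needs capability descriptions to choose a model, so adding a model means adding a catalog entry rather than rewriting the workflow.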

Crucially, AI agents don’t replace the specialized models – they elevate them. Your fraud detection engine remains the authoritative tool for spotting fraud; your recommendation system is still the expert on user tastes. What changes is how they’re used. The agent layer can invoke these expert systems precisely when needed and combine their outputs with other reasoning. A useful analogy is thinking of each traditional model as a skilled team member, and the AI agent as a project manager who knows when to call on each member and how to compile their expertise into a solution.

This approach brings several benefits:

Holistic Problem Solving

The organization can tackle end-to-end problems (like customer onboarding, order fulfillment, claim processing) with AI handling many steps autonomously. The agent navigates across what used to be disparate systems to achieve the overarching goal.

Reusability

Existing models and APIs become reusable components for many different agent-led workflows. This maximizes ROI on those systems. A single sentiment analysis service might be used by a marketing agent, a customer support agent, and an HR feedback agent alike.

Faster Development

Rather than training a brand-new model for every new composite task, the agent just reuses building blocks. Development shifts from model-training to agent orchestration and prompt design, which can be faster and more iterative.

Adaptability

If one component improves (say, you deploy a more accurate recommendation model), every agent that uses that component instantly benefits. Similarly, if a component fails, the agent can be designed to handle it gracefully or use a fallback.
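Graceful handling of a failed component might look like the sketch below, where a simulated outage in the primary tool triggers a simpler fallback. Both recommender functions and `call_with_fallback` are hypothetical names chosen for this example.

```python
from typing import Callable, List

def primary_recommender(customer_id: str) -> List[str]:
    """Hypothetical primary model; here it simulates an outage."""
    raise TimeoutError("recommendation service unavailable")

def bestseller_fallback(customer_id: str) -> List[str]:
    """Hypothetical fallback: a static list that needs no model."""
    return ["bestseller-1", "bestseller-2"]

def call_with_fallback(
    primary: Callable[[str], List[str]],
    fallback: Callable[[str], List[str]],
    argument: str,
) -> List[str]:
    """Try the primary tool; if it raises, use the fallback instead."""
    try:
        return primary(argument)
    except Exception:
        return fallback(argument)

print(call_with_fallback(primary_recommender, bestseller_fallback, "c-1"))
# prints ['bestseller-1', 'bestseller-2']
```

Catching every exception keeps the sketch short; a real deployment would likely catch narrower error types and log the failure.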

To achieve this integration, enterprises must invest in a robust API and data infrastructure. Each legacy model or tool needs a well-defined interface (often as a microservice or endpoint) so the agent can call it. Metadata about each tool’s capabilities is also important – an agent needs to know what each tool can do (and cannot do). This is analogous to a skills directory for the AI. In many cases, organizations are wrapping older systems with new API layers or adopting integration platforms to prepare for agent orchestration.
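A skills directory of this kind could be as simple as a list of structured descriptions. In the sketch below, the `ToolSpec` fields, the two catalog entries, and the `example.internal` endpoints are all invented for illustration; the rendered text is the sort of context an agent might be given when choosing tools.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class ToolSpec:
    name: str
    endpoint: str            # hypothetical internal URL
    can_do: str              # what the tool is for
    cannot_do: str           # an explicit limit the agent should respect
    inputs: Tuple[str, ...]  # names of required input fields

# Hypothetical catalog entries.
CATALOG: List[ToolSpec] = [
    ToolSpec("sentiment", "https://tools.example.internal/sentiment",
             "score the sentiment of a short text", "translate text", ("text",)),
    ToolSpec("fraud_check", "https://tools.example.internal/fraud",
             "score a transaction for fraud risk", "block or refund payments",
             ("transaction_id", "amount")),
]

def describe_catalog(catalog: List[ToolSpec]) -> str:
    """Render the catalog as plain text an agent could receive as context."""
    lines = []
    for spec in catalog:
        args = ", ".join(spec.inputs)
        lines.append(f"{spec.name}({args}): {spec.can_do}; not for: {spec.cannot_do}")
    return "\n".join(lines)

print(describe_catalog(CATALOG))
```

Stating what a tool cannot do is as useful as stating what it can, since it gives the agent a reason to look elsewhere.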

Security and permissions remain paramount. The agent should only call functions it’s authorized to. For instance, an agent might have read-access to customer data via an analytics API but not direct write-access to the production database unless explicitly allowed. Proper identity and access management must be in place so that the agent’s tool use is governed just like a human user’s actions would be.
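A scope check in the spirit of this paragraph is sketched below. The agent identities, scope strings, and tool names are hypothetical, and a real deployment would delegate this to its existing identity and access management system rather than to in-memory dictionaries.

```python
from typing import Dict, Set

# Hypothetical scope grants per agent identity.
GRANTS: Dict[str, Set[str]] = {
    "support-agent": {"analytics:read"},
    "ops-agent": {"analytics:read", "orders:write"},
}

# The scope each tool requires before it may be called.
REQUIRED_SCOPE: Dict[str, str] = {
    "read_customer_stats": "analytics:read",
    "update_order": "orders:write",
}

def authorize(agent_id: str, tool_name: str) -> bool:
    """Return True only if the agent holds the scope the tool requires."""
    required = REQUIRED_SCOPE.get(tool_name)
    if required is None:
        return False  # unknown tools are denied by default
    return required in GRANTS.get(agent_id, set())

print(authorize("support-agent", "read_customer_stats"))  # prints True
print(authorize("support-agent", "update_order"))         # prints False
```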

When done right, the results can be impressive. Early adopters have reported significant improvements in automation of complex processes. And while the technology is still maturing, it’s clear that an AI agent working with a suite of specialist models can handle tasks that once required multiple disconnected systems and manual oversight. This is the essence of AI agents redefining the architecture – from isolated brains to a connected, thinking ecosystem.

Future Outlook: Orchestrated AI in the Enterprise (Conversational Spaces and Beyond)

The trajectory is set: enterprise AI architectures are becoming more fluid, more conversational, and more intelligent. What might a fully realized agent-driven architecture look like in the near future? Several key characteristics emerge:

Modular & Composable

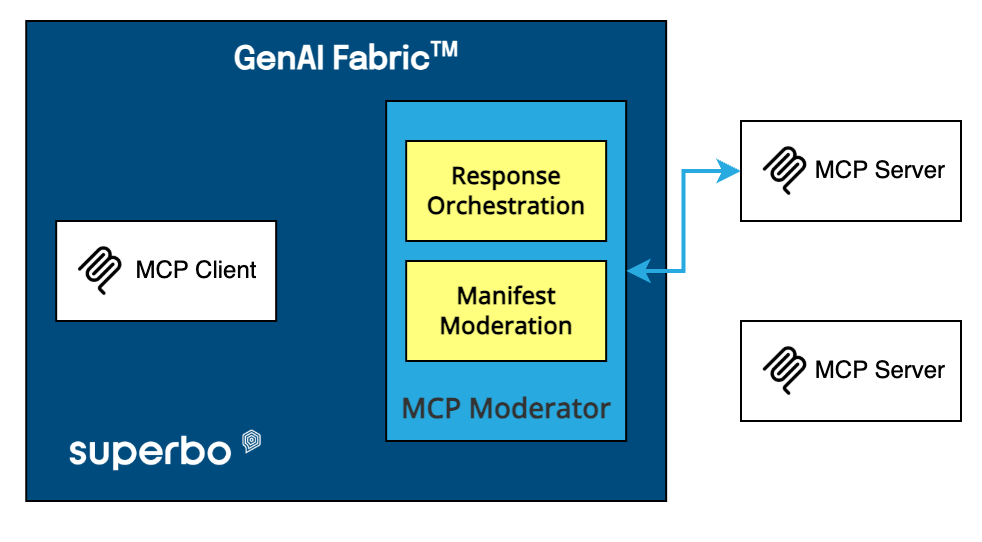

Future AI architecture will be built as a collection of interchangeable components. Think of it as an AI Fabric woven from many threads – data services, AI models, business logic, and interface channels – all interconnected. Enterprises will be able to plug new capabilities (a new “micro-model” or microservice) into this fabric and, once the capability is described to them, the AI agents can start using it as another tool in their toolbox. This modularity ensures the system can evolve rapidly as new AI innovations arrive.

Conversational Spaces as the New UI

For many use cases, conversational interfaces (chatbots, voice assistants, etc.) will become the unified front-end to complex workflows. Instead of navigating multiple apps or forms, a user – be it a customer or an employee – will simply ask for what they need in a natural language conversation. Behind the scenes, an AI agent in a Conversational Space interprets that request and orchestrates the response by consulting various back-end systems. Superbo refers to this concept as Conversational Spaces™, powered by a GenAI Fabric: essentially, a secure environment where multiple micro-assistants (small specialized agents) collaborate within a conversation to get things done. For example, when a manager asks in a chat, “Prepare the sales report for last quarter and email it to the team,” one micro-assistant might pull the sales data, another formats the report, and another drafts the email – all coordinated within the chat session. The user experiences a smooth conversation, and the AI fabric handles the complexity.
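The sales report example can be sketched as three small functions coordinated by one handler. This is a generic illustration, not Superbo's implementation: the micro-assistant names, the hard-coded sales figures, and the draft-only email step are all assumptions.

```python
from typing import Dict, List, Tuple

def data_assistant(quarter: str) -> List[Tuple[str, int]]:
    """Hypothetical micro-assistant that pulls sales rows (hard-coded here)."""
    return [("North", 1200), ("South", 800)]

def report_assistant(quarter: str, rows: List[Tuple[str, int]]) -> str:
    """Hypothetical micro-assistant that formats rows into a plain-text report."""
    lines = [f"Sales report for {quarter}"]
    lines += [f"{region}: {amount}" for region, amount in rows]
    lines.append(f"Total: {sum(amount for _, amount in rows)}")
    return "\n".join(lines)

def email_assistant(report: str, recipients: List[str]) -> Dict[str, object]:
    """Hypothetical micro-assistant that drafts (but does not send) an email."""
    return {"to": recipients, "subject": "Quarterly sales report", "body": report}

def handle_request(quarter: str, recipients: List[str]) -> Dict[str, object]:
    """Coordinate the three micro-assistants within one request."""
    rows = data_assistant(quarter)
    report = report_assistant(quarter, rows)
    return email_assistant(report, recipients)

draft = handle_request("last quarter", ["team@example.com"])
print(draft["body"])
```

From the user's side this is one request and one reply; the division of labor stays behind the conversation.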

Secure, Governed, and Trustworthy

As AI agents gain more autonomy, enterprises will double down on AI governance. Expect to see architectures with built-in audit trails for agent decisions, sandboxes for testing agent behaviors, and policy engines that can constrain agent actions. Similar to how cloud architectures today include firewalls, monitoring, and compliance checks, tomorrow’s AI architecture will include guardrails to ensure agents operate safely within business and ethical guidelines. Security is non-negotiable – these agents will have access to sensitive systems, so their authentication and authorization schemes will be as robust as any human user’s, if not more so. The goal is to embrace agentic power without introducing chaos or risk.

Orchestration and Intelligence Layers

The concept of an AI orchestration layer will become a standard part of enterprise architecture diagrams. This layer (what we’ve been calling the agent layer) will sit alongside existing layers (data layer, application layer, etc.), mediating between user intents and core services. It will also host the intelligence that decides how to fulfill requests. Some organizations may deploy multiple orchestrator agents for different domains (e.g., one specialized in IT support, another for finance operations), all adhering to common standards. Think of it as an agent ecosystem within the enterprise – diverse agents specialized by domain, yet able to hand off tasks to each other when a request spans multiple areas.
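Domain-specialized agents behind a shared entry point might be wired up as in the sketch below. Keyword matching stands in for whatever classifier or LLM a real router would use, and the agent functions, keyword lists, and `route` helper are hypothetical.

```python
from typing import Callable, Dict, List

def it_support_agent(request: str) -> str:
    """Hypothetical domain agent for IT support."""
    return f"[IT] handled: {request}"

def finance_agent(request: str) -> str:
    """Hypothetical domain agent for finance operations."""
    return f"[Finance] handled: {request}"

# Hypothetical keyword routing table.
DOMAIN_KEYWORDS: Dict[str, List[str]] = {
    "it": ["laptop", "password", "vpn"],
    "finance": ["invoice", "budget", "expense"],
}
DOMAIN_AGENTS: Dict[str, Callable[[str], str]] = {
    "it": it_support_agent,
    "finance": finance_agent,
}

def route(request: str) -> List[str]:
    """Send the request to every domain agent whose keywords appear in it."""
    text = request.lower()
    responses = []
    for domain, keywords in DOMAIN_KEYWORDS.items():
        if any(word in text for word in keywords):
            responses.append(DOMAIN_AGENTS[domain](request))
    return responses

print(route("New laptop and expense approval"))
```

A request that touches two domains reaches both agents, which is a simplified form of the hand-off described above.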

Continuous Learning and Improvement

Unlike static systems, AI agents can be designed to learn from each interaction. The architecture will likely include feedback loops – successful and failed agent actions can feed into model fine-tuning, and user feedback can be incorporated to improve future performance. Over time, the agent gets better at achieving goals and the underlying models improve. This means the architecture isn’t just using AI – it’s also generating new AI insights as a byproduct of operation (a virtuous cycle of data).
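The first half of such a feedback loop, recording outcomes and flagging weak spots, can be sketched simply. The outcome log, tool names, and threshold below are hypothetical, and the actual fine-tuning step is deliberately left out.

```python
from collections import defaultdict
from typing import DefaultDict, List

# Outcome log: tool name -> list of success flags from past agent actions.
OUTCOMES: DefaultDict[str, List[bool]] = defaultdict(list)

def record_outcome(tool_name: str, succeeded: bool) -> None:
    """Store whether an agent action using this tool succeeded."""
    OUTCOMES[tool_name].append(succeeded)

def success_rate(tool_name: str) -> float:
    """Fraction of recorded runs that succeeded (0.0 if none recorded)."""
    runs = OUTCOMES.get(tool_name, [])
    return sum(runs) / len(runs) if runs else 0.0

def fine_tuning_candidates(threshold: float) -> List[str]:
    """Tools whose success rate falls below the threshold, sorted by name."""
    return sorted(name for name in OUTCOMES if success_rate(name) < threshold)

# Hypothetical history: the recommender mostly succeeds, the OCR tool mostly fails.
for flag in (True, True, True, False):
    record_outcome("recommender", flag)
for flag in (True, False, False):
    record_outcome("ocr", flag)

print(fine_tuning_candidates(0.5))  # prints ['ocr']
```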

In this envisioned future, an enterprise’s AI feels less like a patchwork of programs and more like an adaptive organism embedded in the company’s operations. Business leaders could ask strategic questions to an AI advisor agent and get synthesized answers drawing from all corners of the organization’s data and expertise. Routine processes might run almost entirely under AI supervision, with humans stepping in only for exceptions or creative input.

Notably, companies like Superbo are already exemplifying pieces of this future. They emphasize delivering real, working AI solutions via micro-assistants instead of chasing buzzwords. As one analysis noted, much of what some call “Large Action Models (LAMs)” – referring to autonomous action-taking AI – is effectively achieved today with well-structured agentic workflows. In fact, Superbo’s own GenAI Fabric approach uses multiple micro-agents to orchestrate complex workflows securely, integrating reasoning and execution. In practice, this means enterprises can get started now with agent-based architectures rather than waiting for some mythical future AI. “Our microassistants already power seamless execution without waiting for the next big acronym,” a Superbo post quips, underscoring that this is a practical evolution, not just hype.

Conclusion

AI agents are redefining what “AI architecture” means for enterprises. The old model of one-model-per-problem is giving way to an interconnected network of AI capabilities orchestrated by intelligent agents. For CxOs and technology leaders, this transition offers huge opportunities – from drastically improved automation and efficiency, to more natural AI-driven user experiences, to greater agility in deploying new AI innovations.

However, it also demands careful planning. Architecting for AI agents means investing in integration, governance, and new skills (like prompt engineering and conversation design). It requires thinking in terms of platforms and ecosystems, not one-off solutions. The payoff is an AI infrastructure that is far more aligned with how businesses actually operate: cross-functional, goal-oriented, and adaptable.

In summary, the enterprise AI architecture of tomorrow is modular, secure, and orchestrated by AI agents working in concert. These agents and micro-assistants will inhabit the conversational spaces where work gets done, making technology more accessible and tasks more automated than ever before. Forward-looking organizations are already laying this groundwork – turning yesterday’s isolated models into today’s cohesive, agent-powered AI fabric that will support the business of the future. The era of siloed AI is ending, and an agent-enabled future is beginning. Now is the time to embrace this shift, with pragmatism and clear vision, to ensure your enterprise stays ahead in the new AI-driven landscape.