Beyond Raw MCP: A Security-First Approach to AI Tool Integration

The Model Context Protocol (MCP), introduced by Anthropic in late 2024, is an open standard that allows AI applications to discover and invoke external tools exposed by an MCP server. Anthropic describes it as “a USB-C port for AI applications,” because it gives models a predictable way to connect to various data sources and actions. Since its introduction, the MCP ecosystem has expanded rapidly, with early adopters like Block, Salesforce, and Replit implementing production-grade MCP endpoints. As of May 2025, public directories list over 5,000 community MCP servers, and major AI providers such as OpenAI and Google DeepMind officially adopted MCP in March and April 2025, respectively.

However, this swift adoption has brought to light significant challenges when organizations integrate raw MCP traffic directly into their business systems. Direct integration without adequate safeguards, often called “Raw MCP” or “Naïve MCP”, can lead to operational inefficiencies and severe security vulnerabilities for enterprises.

Key Risks of Raw MCP Integration

While not exhaustive, the following table highlights the most common and impactful vulnerabilities observed in unmoderated MCP deployments:

The Path Forward

While MCP’s standardization offers tremendous value for AI-tool integration, enterprise deployment requires more than connecting clients to servers. Organizations need security-first implementation frameworks that preserve MCP’s interoperability benefits while addressing these fundamental vulnerabilities. This article explores how enterprises can implement MCP safely through layered security controls, prompt management, and governance frameworks that turn MCP from a potential liability into a competitive advantage.

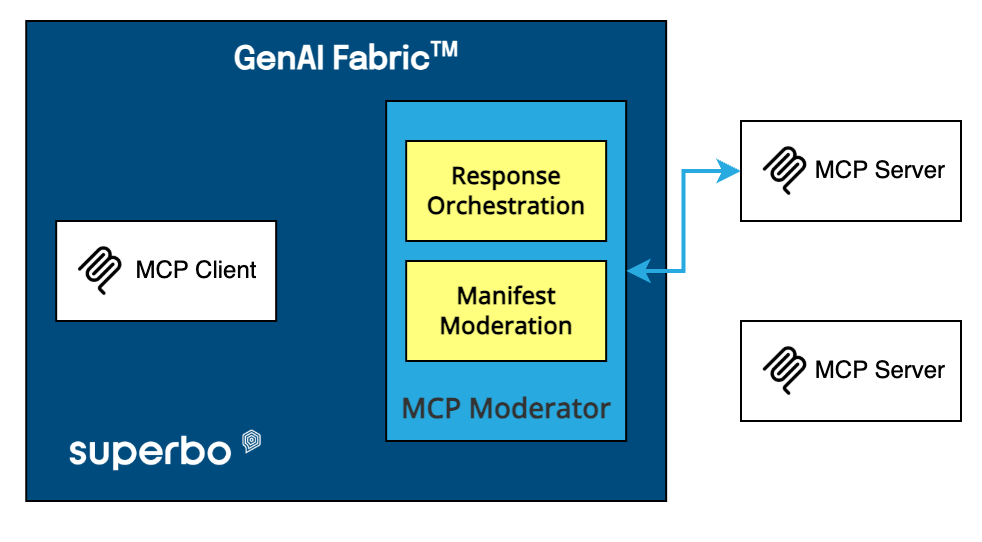

Introducing "Moderated MCP": Superbo's Security-First Tool Augmentation Layer

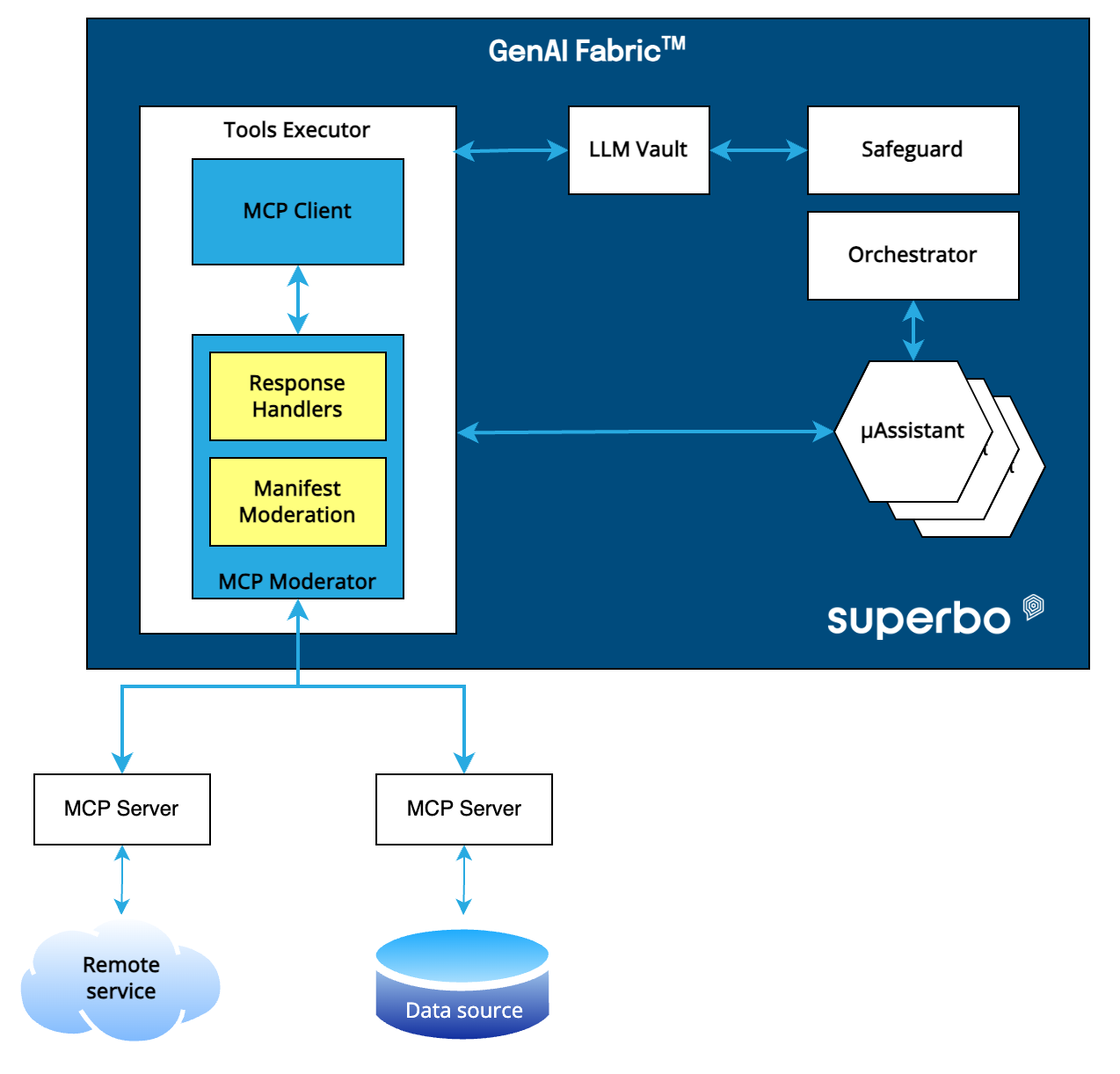

In July 2024, we presented Superbo GenAI Fabric™, a security-first agentic framework that is currently deployed in production and serves over 5 million end-users. Our architecture is built around two concepts: micro-Assistants (agent encapsulators) and LLM Vault, a security layer that mitigates all the applicable OWASP LLM risks in agentic workflows. You can read more here:

https://superbo.ai/unleashing-superbo-genai-fabric/

and here:

https://superbo.ai/security-for-agentic-ai-solutions-new-and-emerging-challenges/

Building on the principles of layered security and intelligent management, we now introduce Moderated MCP: an intermediate service layer within GenAI Fabric™ that governs multiple aspects of the MCP traffic, designed specifically to address the critical challenges of enterprise MCP adoption. By sitting between the MCP client and server, Moderated MCP transforms raw MCP traffic into a secure, efficient, and governable workflow.

Here we examine two Moderated MCP capabilities that address challenges critical to production deployments and often overlooked: Tool Manifest Moderation and Tool Response Orchestration.

The following figure shows the security stack of Opero:

Figure 1. Superbo GenAI Fabric™ security stack and the MCP Moderator

Tool Manifest Moderation

Moderated MCP dynamically manages tool manifests to combat context bloat, prompt misalignment/style drift, and prompt injection (OWASP LLM-01). It doesn’t merely select active tools per prompt but also validates, sanitizes, translates, and optimizes their names, descriptions, and JSON schemas. This process ensures token efficiency, aligns tool metadata with the LLM’s capabilities, conversational style and local prompt strategy, and eliminates embedded malicious instructions that could exploit tool description poisoning. By controlling and rewriting tool metadata, Moderated MCP establishes a secure foundation, preventing extraneous information from compromising prompt accuracy, increasing costs, or enabling security breaches.

Figure 2. Moderated MCP: Tool Manifest Moderation
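The manifest moderation steps described above (select, sanitize, trim) can be illustrated with a minimal Python sketch. This is not Superbo's implementation: the function names, the `SUSPICIOUS` pattern list, and the character limits are all assumptions made for illustration. The only element taken from MCP itself is the tool shape (`name`, `description`, `inputSchema`). Pattern matching alone would not be an adequate defense against tool description poisoning; it stands in here for whatever validation a real moderation layer performs.

```python
import re
from typing import Any

# Hypothetical patterns that often signal instructions smuggled into tool metadata.
SUSPICIOUS = re.compile(
    r"ignore (all |any )?(previous|prior) instructions"
    r"|do not (tell|inform) the user"
    r"|<\s*/?\s*(system|important)\s*>",
    re.IGNORECASE,
)


def sanitize_description(text: str, max_chars: int = 200) -> str:
    """Drop sentences that look like injected instructions, then truncate."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    kept = [s for s in sentences if s and not SUSPICIOUS.search(s)]
    return " ".join(kept)[:max_chars].rstrip()


def prune_schema(schema: dict[str, Any]) -> dict[str, Any]:
    """Keep only the schema fields the model needs to form a call."""
    props: dict[str, Any] = {}
    for name, spec in schema.get("properties", {}).items():
        slim = {k: v for k, v in spec.items() if k in ("type", "enum")}
        if "description" in spec:
            # Parameter descriptions can be poisoned too, so sanitize them as well.
            slim["description"] = sanitize_description(spec["description"], 80)
        props[name] = slim
    return {
        "type": "object",
        "properties": props,
        "required": list(schema.get("required", [])),
    }


def moderate_manifest(
    tools: list[dict[str, Any]], allowed: set[str], max_chars: int = 200
) -> list[dict[str, Any]]:
    """Return a reduced, sanitized manifest containing only allowed tools."""
    moderated = []
    for tool in tools:
        if tool["name"] not in allowed:
            continue  # tool is not active for this prompt
        moderated.append(
            {
                "name": tool["name"],
                "description": sanitize_description(
                    tool.get("description", ""), max_chars
                ),
                "inputSchema": prune_schema(tool.get("inputSchema", {})),
            }
        )
    return moderated
```

The moderated list, rather than the server's original manifest, is what would be injected into the prompt, so both token cost and exposure to poisoned metadata are bounded by the moderator.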


Tool Response Orchestration

Naïve MCP often directly injects raw tool responses into the LLM’s context, forcing the model to interpret and format complex or verbose data, which can lead to prompt misalignment and suboptimal LLM performance. Moderated MCP, however, allows developers to define custom response handlers. These handlers pre-process and tailor tool outputs based on business logic, ensuring that the information presented to the LLM is precisely what’s needed for accurate reasoning and a consistent user experience, rather than relying solely on the LLM’s interpretation capabilities to filter and rephrase.

Figure 3. Moderated MCP: Tool Response Orchestration. Response handlers turn raw responses into business-aware responses that fit the local prompt strategy.
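A custom response handler of the kind described above might look like the following Python sketch. The registry, the `response_handler` decorator, the `orchestrate` function, and the `get_balance` payload fields are hypothetical names chosen for illustration; the source does not specify how handlers are declared.

```python
import json
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], str]

# Hypothetical registry mapping a tool name to its response handler.
_HANDLERS: dict[str, Handler] = {}


def response_handler(tool_name: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a handler for one tool."""
    def register(fn: Handler) -> Handler:
        _HANDLERS[tool_name] = fn
        return fn
    return register


def default_handler(raw: dict[str, Any], max_chars: int = 500) -> str:
    """Fallback: compact JSON, truncated so verbose payloads cannot flood the context."""
    text = json.dumps(raw, separators=(",", ":"), sort_keys=True)
    return text if len(text) <= max_chars else text[:max_chars] + "...[truncated]"


@response_handler("get_balance")
def summarize_balance(raw: dict[str, Any]) -> str:
    """Business rule (assumed): the model only needs the amount and the currency."""
    return f"Balance: {raw['amount']:.2f} {raw['currency']}"


def orchestrate(tool_name: str, raw: dict[str, Any]) -> str:
    """Convert a raw tool response into the text that is shown to the LLM."""
    handler = _HANDLERS.get(tool_name, default_handler)
    return handler(raw)
```

With this arrangement, internal fields such as ledger identifiers or audit trails never reach the model unless a handler explicitly passes them through, and tools without a dedicated handler still get a bounded, predictable representation.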

The Challenge of Scale and Complexity in Enterprise Deployments

The benefits of Moderated MCP become even more apparent when considering the transition from controlled environments to complex, real-world deployments. While a playground with only two or three MCP tools might seem efficient for PoCs and pilots, especially with extra-large language models, this simplicity rarely translates to production environments. In production, organizations integrate dozens of MCP tools whose manifests can overlap significantly. This complexity is further compounded when a smaller Large Language Model (LLM) must be used due to cost, latency, fine-tuning, or deployment constraints.

In such scenarios, a prompt engineer faces the challenging task of mastering not only the local prompt template but also all verbatim injections from the numerous tool manifests. Without Moderated MCP’s ability to dynamically manage, sanitize, and optimize these manifests, the prompt engineer would need an encyclopedic knowledge of how each tool’s description and schema interacts with the LLM’s capabilities and the overall prompt strategy. This manual effort is prone to errors, significantly increases the complexity of prompt engineering, and can easily lead to “prompt misalignment” or “context bloat” as described earlier. Moderated MCP’s automated and intelligent handling of tool manifests drastically reduces this burden, ensuring that the LLM receives a concise, relevant, and secure context, regardless of the number or complexity of integrated tools, and regardless of the LLM’s size. This allows for greater scalability, efficiency, and a more robust security posture in enterprise AI deployments.
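To make the scaling argument concrete, here is a rough Python sketch of per-prompt tool selection under a context budget. It assumes a naive keyword-overlap score and a crude four-characters-per-token estimate; both are placeholders for illustration, and `select_tools`, `STOPWORDS`, and the default limits are hypothetical rather than part of Moderated MCP.

```python
import re
from typing import Any

# Small illustrative stopword list so common words do not count as matches.
STOPWORDS = {"a", "an", "the", "of", "for", "to", "is", "in", "on",
             "my", "what", "and", "or"}


def _words(text: str) -> set[str]:
    """Lowercase alphanumeric words, minus stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def select_tools(
    prompt: str,
    tools: list[dict[str, Any]],
    max_tools: int = 5,
    token_budget: int = 300,
) -> list[dict[str, Any]]:
    """Pick the tools most relevant to the prompt without exceeding the budget."""
    prompt_words = _words(prompt)
    scored = []
    for tool in tools:
        overlap = len(prompt_words & _words(tool["name"] + " " + tool["description"]))
        if overlap:
            scored.append((overlap, tool))
    # sorted() is stable, so ties keep their original manifest order.
    scored.sort(key=lambda pair: pair[0], reverse=True)

    selected, used = [], 0
    for _, tool in scored:
        cost = len(tool["description"]) // 4 + 1  # rough token estimate (assumption)
        if len(selected) >= max_tools or used + cost > token_budget:
            continue
        selected.append(tool)
        used += cost
    return selected
```

A production selector would more plausibly rely on embeddings or explicit routing rules, but even this simple version shows how the prompt can stay bounded when dozens of tools are registered, which matters most for smaller models.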

Conclusion

The MCP standard unlocks unprecedented tool-powered AI workflows, but naïve, unmoderated deployments introduce risks such as context bloat, prompt drift, and OWASP LLM threats. Superbo’s Moderated MCP layer, featuring Tool Manifest Moderation and Tool Response Orchestration, compresses prompts, preserves style fidelity, and neutralizes malicious tool payloads. This approach is a cornerstone of Superbo’s GenAI Fabric™ security, empowering enterprises to integrate AI tools safely and effectively within their existing systems.