Introduction: The Fascination with LLMs and Reasoning Abilities

Large Language Models (LLMs) like GPT-3.5 and GPT-4 have captivated the world with their ability to answer questions, write essays, and even code. But do these impressive abilities translate into genuine reasoning and planning skills? The question matters because the answer defines both the limitations and the potential of LLMs. If these models can reason, they could revolutionize fields like healthcare, education, and business. To evaluate this properly, we need to dissect what “reasoning” means and whether LLMs possess it in the sense we usually intend.

What is Reasoning and Planning in the Context of AI?

The concepts of System 1 and System 2 reasoning were popularized by psychologist Daniel Kahneman in his book Thinking, Fast and Slow.

System 1 refers to intuitive, fast, and automatic thinking—often driven by pattern recognition and gut reactions. It’s the type of thinking we use to make quick decisions without much deliberation.

On the other hand, System 2 is slow, analytical, and deliberate. It involves logical reasoning, careful evaluation of information, and weighing different outcomes before making a decision.

Reasoning and planning in AI similarly involve taking logical steps, evaluating multiple pathways, and predicting outcomes to solve problems. In humans, this kind of work typically draws on System 2 thinking.

LLMs, by contrast, function more like a pseudo System 1, generating responses from patterns and correlations they have observed in vast training data.

The difference is crucial: LLMs are not explicitly designed to evaluate logical chains of thought, so their “reasoning” is an approximate process rather than a principled one.

Techniques like Chain of Thought (CoT) prompting have been introduced to help LLMs express their reasoning steps explicitly. CoT involves breaking down complex problems into simpler, sequential steps, which helps LLMs perform better on certain reasoning tasks by making their thought process more structured.
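To make the idea concrete, here is a minimal sketch of how a direct prompt and a CoT prompt might differ. The function names, the prompt wording, and the example questions are illustrative assumptions, not a standard API or a prescribed template; the resulting string would be passed to whichever model client you use.

```python
from typing import Optional, Tuple


def direct_prompt(question: str) -> str:
    """Ask for the answer only, with no intermediate steps."""
    return f"Question: {question}\nAnswer:"


def cot_prompt(question: str, worked_example: Optional[Tuple[str, str]] = None) -> str:
    """Ask the model to write out its steps before the final answer.

    `worked_example` is an optional (question, reasoning) pair that shows
    the model the step-by-step format we want it to imitate.
    """
    parts = []
    if worked_example is not None:
        example_question, example_reasoning = worked_example
        parts.append(f"Question: {example_question}\nReasoning: {example_reasoning}\n")
    parts.append(f"Question: {question}\nReasoning: Let's think step by step.")
    return "\n".join(parts)


# Hypothetical inputs, chosen only to illustrate the prompt shape.
QUESTION = "A clinic sees 12 patients per hour for 6 hours. How many patients is that?"
EXAMPLE = (
    "A tray holds 4 rows of 5 vials. How many vials?",
    "There are 4 rows with 5 vials each, so 4 * 5 = 20. Final answer: 20",
)

print(direct_prompt(QUESTION))
print(cot_prompt(QUESTION, EXAMPLE))
```

Note that nothing in the model changes between the two calls; only the text it is asked to continue does.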

However, while CoT can improve the model’s performance, the resulting reasoning often still fails to generalize or adapt, which limits its effectiveness in novel situations.

Capabilities of LLMs: Achievements and Limitations

There is no denying the power of LLMs. They have passed professional exams, generated working code, and solved natural language puzzles, which suggests an extraordinary ability to learn from data. For instance, results on benchmarks like GSM8K, a collection of grade-school math word problems, show the strides LLMs have made in reproducing multi-step logical operations. However, these successes do not always translate to genuine reasoning. When conditions are slightly altered, such as changing the context of a math problem, performance often drops drastically, exposing the limitations of LLMs in adapting to new, unfamiliar problems.
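One way to probe this sensitivity is to compare accuracy on a fixed problem with accuracy on reworded variants of it. The sketch below assumes a hypothetical problem template and uses a toy stand-in model that has simply memorized one item; a real evaluation would replace `memorizing_model` with a call to an actual LLM.

```python
import random

# Hypothetical template; surface details vary while the required logic stays fixed.
TEMPLATE = "{name} has {a} apples and buys {b} more. How many apples does {name} have now?"


def make_variant(rng):
    """Return a (question, answer) pair with fresh names and numbers."""
    a, b = rng.randint(2, 99), rng.randint(2, 99)
    name = rng.choice(["Ava", "Ben", "Chen", "Dara"])
    return TEMPLATE.format(name=name, a=a, b=b), a + b


def accuracy(model, problems):
    """Fraction of problems for which the model returns the exact answer."""
    correct = sum(1 for question, answer in problems if model(question) == answer)
    return correct / len(problems)


# The one item our toy model has "seen in training".
ORIGINAL = (TEMPLATE.format(name="Sam", a=3, b=4), 7)


def memorizing_model(question):
    """Toy stand-in: recalls the memorized answer, fails on anything else."""
    return 7 if question == ORIGINAL[0] else -1


rng = random.Random(0)
variants = [make_variant(rng) for _ in range(20)]

print(accuracy(memorizing_model, [ORIGINAL]))  # 1.0
print(accuracy(memorizing_model, variants))    # 0.0
```

The toy model is deliberately extreme. Real models sit somewhere between pure recall and full generalization, and the gap between the two accuracy figures is one rough indicator of where.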

The Illusion of Reasoning: Reciting vs Genuine Understanding

One of the biggest misunderstandings about LLMs is that they perform deep reasoning, when in fact they rely heavily on pattern matching.

Studies have shown that these models often give correct answers to questions they have seen in training but struggle when faced with new conditions or questions that involve nuanced logical steps.

For example, LLMs often fail at counterfactual reasoning, where they need to understand a hypothetical scenario that deviates from learned conditions.

This inability indicates that they lack a fundamental aspect of logical thinking—adaptability to new rules or contexts.

Chain of Thought prompting attempts to mitigate this limitation by guiding LLMs through a series of structured reasoning steps, but it is still limited in its ability to address fundamentally new or complex scenarios without prior examples.

The Medicine Context: What’s Unique About Medical Reasoning?

Medical reasoning requires understanding complex interactions between patient data, medical histories, and treatment guidelines. It involves not only factual recall but also the synthesis of different types of knowledge: codified knowledge from textbooks, experiential knowledge from patient interactions, and vertical reasoning across multiple interdependent medical concepts. Medical professionals often use analogical reasoning to draw connections between similar symptoms or cases.

LLMs struggle here because they cannot integrate these layers on their own. Without specific external guidance, they often reach incomplete or misleading conclusions.

Why LLMs Alone are Insufficient for Complex Reasoning in Medicine

The challenges in medical reasoning illustrate the broader shortcomings of LLMs in reasoning. LLMs often fail to integrate structured, codified information with the unstructured, experience-based knowledge needed for accurate medical recommendations. Their answers may sound convincing but frequently lack depth, context, and specificity, which are critical in fields like medicine. For instance, the KGAREVION knowledge graph agent demonstrates how leveraging an external grounded knowledge base can improve accuracy by filtering out incorrect or irrelevant information, which LLMs alone struggle to do.
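The general idea of filtering model output against a grounded knowledge base can be sketched in a few lines. This is not KGAREVION's actual method, only a simplified illustration: the tiny graph, the triple format, and the function name are all assumptions made for the example.

```python
# Toy knowledge graph: a set of (head, relation, tail) triples.
# Illustrative only; a real system would query a curated biomedical graph.
KNOWLEDGE_GRAPH = {
    ("metformin", "treats", "type 2 diabetes"),
    ("insulin", "treats", "type 1 diabetes"),
    ("warfarin", "interacts_with", "aspirin"),
}


def filter_claims(candidate_triples, graph):
    """Split candidate triples into those found in the graph and those not found."""
    supported, unsupported = [], []
    for triple in candidate_triples:
        # Normalize case and whitespace so trivial differences do not cause misses.
        normalized = tuple(part.strip().lower() for part in triple)
        if normalized in graph:
            supported.append(triple)
        else:
            unsupported.append(triple)
    return supported, unsupported


# Hypothetical claims extracted from an LLM answer.
llm_claims = [
    ("Metformin", "treats", "Type 2 diabetes"),
    ("Metformin", "treats", "Type 1 diabetes"),
]

supported, unsupported = filter_claims(llm_claims, KNOWLEDGE_GRAPH)
print("supported:", supported)
print("unsupported:", unsupported)
```

An unsupported triple is not necessarily false, since the graph may simply be incomplete. A practical system would need a policy for such cases, for example flagging them for review rather than silently dropping them.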

The Role of Additional Tools: What Else is Needed for Proper Reasoning, Planning, and Reflection?

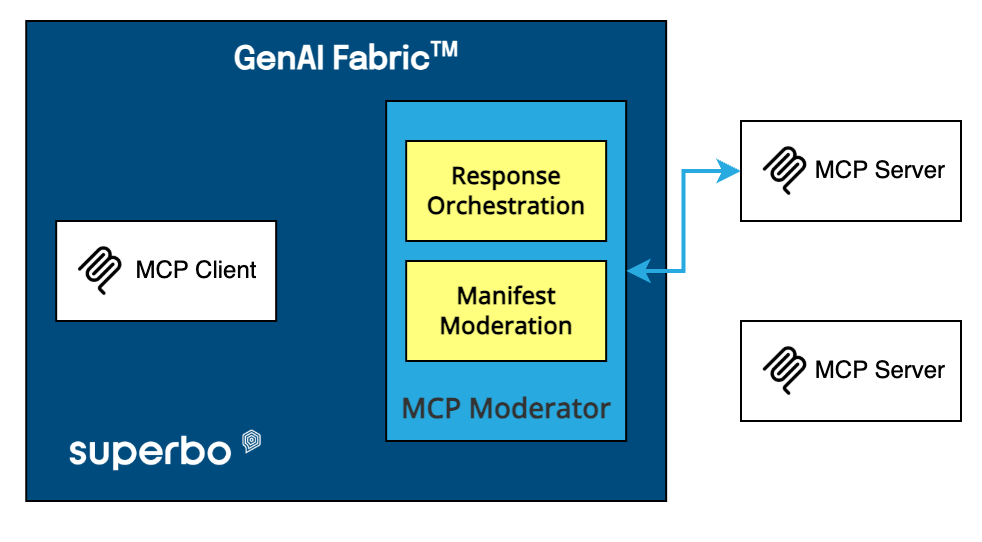

To move closer to true reasoning, LLMs need the support of additional tools. Knowledge Graph Agents help validate and ground the LLMs’ responses, providing a level of fact-checking that LLMs cannot inherently perform. Moreover, dynamic retrieval systems such as Retrieval-Augmented Generation (RAG) can improve response accuracy by pulling in verified data from external sources, but they still need post-retrieval checks to ensure factual correctness.
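The retrieve-then-check pattern described above might look like the following sketch. The corpus, the source names, the word-overlap scoring, and the `verified` flag are all simplifying assumptions; production systems typically use embedding search and far richer verification.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    text: str
    source: str      # hypothetical source label
    verified: bool   # whether the source has been vetted


# Made-up corpus for illustration.
CORPUS = [
    Passage("Adults should drink water regularly during hot weather", "guideline-handbook", True),
    Passage("Drink only salt water during hot weather", "anonymous-forum", False),
    Passage("Regular sleep supports recovery after illness", "guideline-handbook", True),
]


def tokenize(text):
    return set(text.lower().split())


def retrieve(query, corpus, k=2):
    """Rank passages by word overlap with the query and keep the top k."""
    query_tokens = tokenize(query)
    ranked = sorted(corpus, key=lambda p: len(query_tokens & tokenize(p.text)), reverse=True)
    return ranked[:k]


def post_retrieval_check(passages):
    """Keep only passages from vetted sources before they reach the model."""
    return [p for p in passages if p.verified]


def build_prompt(query, passages):
    context = "\n".join(f"- {p.text} (source: {p.source})" for p in passages)
    return f"Use only the context below.\n{context}\n\nQuestion: {query}"


QUERY = "What should adults drink during hot weather"
retrieved = retrieve(QUERY, CORPUS)
kept = post_retrieval_check(retrieved)
print(build_prompt(QUERY, kept))
```

Here the unvetted passage scores well on relevance and is retrieved, which is exactly why the check has to run after retrieval rather than being assumed away.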

Another promising approach is agentic workflows, where an LLM works iteratively, generating drafts, evaluating its own outputs, and making improvements. This mirrors how humans reflect on and refine their ideas, allowing LLMs to simulate a form of deliberative reasoning.

Chain of Thought prompting also serves as a valuable tool in these workflows, as it allows LLMs to break down reasoning tasks into manageable steps, making complex planning more feasible. However, CoT is not an inherent capability of LLMs but rather a prompting strategy that guides the model to simulate reasoning processes more effectively. Even with CoT, these agentic systems require careful orchestration, often relying on multiple specialized sub-agents, each handling a distinct task, to achieve a more reliable outcome.

Reflection Mechanisms and Iterative Planning in Agentic Systems

Agentic workflows hold promise for elevating LLMs closer to true reasoning by incorporating self-critique and iteration. For instance, having an LLM generate an initial response, critique it, and refine it can enhance accuracy. This iterative approach mimics a human’s reflective process, yet it is constrained by the underlying model’s ability to truly understand and synthesize diverse pieces of information. Techniques like Chain of Thought prompting can help structure these iterative reasoning processes by ensuring each step is explicitly considered and articulated. However, without external checks, these iterative processes can end up amplifying mistakes rather than correcting them.
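A generate, critique, and refine loop can be sketched as follows. All functions here are toy stand-ins for model calls and are assumptions of this example: `generate` returns a draft with a deliberate arithmetic error, `external_check` recomputes the sum independently, and `lenient_self_critique` imitates a model that approves its own output.

```python
def refine(generate, critique, revise, max_rounds=3):
    """Iterate until the critique finds no problems or the round budget runs out.

    Returns (final_draft, accepted).
    """
    draft = generate()
    for _ in range(max_rounds):
        problems = critique(draft)
        if not problems:
            return draft, True
        draft = revise(draft, problems)
    return draft, not critique(draft)


def generate():
    # Toy first draft containing a mistake: 17 + 25 is not 32.
    return {"a": 17, "b": 25, "answer": 32}


def external_check(draft):
    """Independent verifier: recompute the sum instead of trusting the draft."""
    expected = draft["a"] + draft["b"]
    return [] if draft["answer"] == expected else [f"answer should be {expected}"]


def lenient_self_critique(draft):
    """Stand-in for a model that finds nothing wrong with its own output."""
    return []


def revise(draft, problems):
    # Stand-in for a model revising with feedback; here it simply recomputes.
    return {**draft, "answer": draft["a"] + draft["b"]}


checked, checked_ok = refine(generate, external_check, revise)
unchecked, unchecked_ok = refine(generate, lenient_self_critique, revise)

print(checked["answer"], checked_ok)      # 42 True
print(unchecked["answer"], unchecked_ok)  # 32 True
```

Both runs report success, but only the externally checked one is actually correct. The loop is only as trustworthy as the critique step it is given.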

Conclusion: A Path Forward for Reasoning Systems

LLMs have made significant strides in mimicking certain aspects of reasoning and planning, but they still lack the depth needed for complex, adaptive problem-solving. For tasks like medical reasoning, which require an intricate understanding of interdependent factors, LLMs fall short without the help of structured external systems. The path forward lies in hybrid systems—LLMs paired with knowledge graphs, retrieval systems, and verification tools, all working together to achieve a more robust form of reasoning. This approach, leveraging the strengths of different technologies, could unlock the full potential of AI in genuinely intelligent, context-aware problem-solving.