Recently, “AI agents” and “agentic workflows” have become buzzwords in the tech industry, generating much excitement and curiosity. The purpose of this article is to clarify what AI agents are, explore their strengths, weaknesses, and potential dangers, and provide insights into the future of AI agentic workflows.

What Are AI Agents and Agentic Workflows?

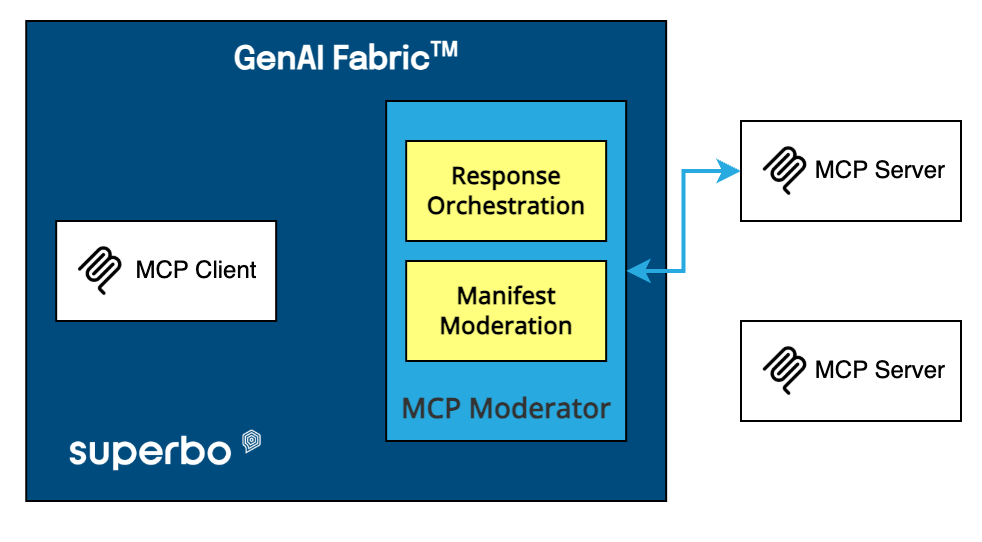

AI agents are software components that automate decision-making and reasoning: they accept user input and break it into smaller, manageable tasks. They plan the sequence of actions needed to solve these tasks and use “tools” to perform calculations, fetch data, or synthesize results. Agents have memory to store task outcomes and may use reflection to evaluate the accuracy of their results. Tools are the components that support agents in performing specific actions, such as data retrieval or complex calculations.

Agentic workflows, in turn, are processes in which AI agents iteratively interact with their environment or with other agents to achieve a goal. Unlike zero-shot prompting, which generates output from a single input, agentic workflows involve multiple iterative steps to refine outcomes.

Large language models (LLMs) play a crucial role in both. They serve as the reasoning engine, enabling agents to interpret and process natural language inputs. LLMs can generate text, answer questions, and provide context-aware responses, which makes them integral to tasks like dialogue management, content creation, and decision-making. By leveraging LLMs, AI agents can handle complex interactions and adapt to a wide range of scenarios.

Risks and Limitations of Deploying to Production
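
Several of the risks below originate in the plan, act, and remember loop described earlier, so it helps to have that loop in view. The following is a minimal Python sketch of it, not code from any framework: `fake_planner` stands in for an LLM call, and the tools, the `$n` placeholder convention, the `reflect` check, and the price data are all invented for illustration.

```python
from typing import Callable

# Hypothetical tools: plain functions the agent can call by name.
def lookup_price(item: str) -> float:
    prices = {"apple": 0.5, "pear": 0.75}  # made-up data for this example
    return prices[item]

def add(a: float, b: float) -> float:
    return a + b

TOOLS: dict[str, Callable] = {"lookup_price": lookup_price, "add": add}

def fake_planner(request: str) -> list[tuple[str, list]]:
    # Stand-in for the LLM: a real agent would ask the model to produce this plan.
    return [
        ("lookup_price", ["apple"]),
        ("lookup_price", ["pear"]),
        ("add", ["$0", "$1"]),  # "$n" means "the result of step n"
    ]

def reflect(result) -> bool:
    # Trivial reflection step: sanity-check a result before accepting it.
    return isinstance(result, (int, float)) and result >= 0

def run_agent(request: str, planner=fake_planner):
    memory = []  # outcomes of completed steps, in order
    for tool_name, args in planner(request):
        # Replace "$n" placeholders with earlier results held in memory.
        resolved = [
            memory[int(a[1:])] if isinstance(a, str) and a.startswith("$") else a
            for a in args
        ]
        result = TOOLS[tool_name](*resolved)
        if not reflect(result):
            raise ValueError(f"step {tool_name} produced a suspicious result")
        memory.append(result)
    return memory[-1], memory

answer, memory = run_agent("What do an apple and a pear cost together?")
print(answer)  # prints 1.25
```

Note what this sketch leaves out: there is no limit on the number of steps, no handling of a tool that raises, and no record of why each step was chosen. Those gaps correspond directly to the risks listed here.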

- Reliability: AI agents often struggle with reliability, achieving success rates of around 60-70%, far below the multiple nines (99.9% or better) that production environments typically demand. Frameworks like CrewAI, Autogen, and LangChain have been known to suffer from reliability issues, which makes them a poor fit for critical applications where consistent performance is essential.

- Complexity and Overhead: Many agent frameworks are too complex for simple tasks. They add unnecessary overhead and make it hard to understand what happens behind the scenes. They also often require significant customization to fit a specific use case, which can be time-consuming and resource-intensive.

- Infinite Loops: Agents can get caught in loops, repeating tasks endlessly if they can’t determine the next step, which leads to wasted resources and time. This is a common issue in frameworks like CrewAI, where agents may continually loop through tasks without producing meaningful results unless explicitly managed.

- Tool Failures: The tools used by agents may fail or become obsolete, requiring constant updates and customization. In some frameworks, many tools were designed for basic applications and may not support advanced agentic workflows, necessitating the development of custom tools to ensure functionality.

- Lack of explainability and difficulty debugging: AI agents often lack transparency, making it hard for users to understand why a specific decision was made. This lack of clarity can hinder trust and adoption. While frameworks like Autogen provide some tools for tracing decision paths, they often fall short of offering comprehensive insight into agent behavior, which makes debugging and auditing difficult.
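
Three of the risks above (infinite loops, tool failures, and poor explainability) can be partly contained with plain control-flow code around the agent, whichever framework is used. The sketch below is one hedged way to do that, assuming a hypothetical `next_step(history)` callable that returns either `(tool_name, args)` or `None` when the agent considers itself done. `run_guarded`, `call_with_retry`, and the trace format are names invented here, not part of CrewAI, Autogen, or LangChain.

```python
import time

def call_with_retry(tool, args, retries=2, delay_s=0.0):
    """Call tool(*args), retrying on any exception. Returns (result, attempts)."""
    for attempt in range(1, retries + 2):
        try:
            return tool(*args), attempt
        except Exception:
            if attempt == retries + 1:
                raise  # out of retries: let the caller record the failure
            time.sleep(delay_s)

def run_guarded(next_step, tools, max_steps=5):
    """Run an agent loop with a hard step budget and a per-step trace."""
    history, trace = [], []
    for step in range(max_steps):
        action = next_step(history)
        if action is None:  # the agent decided it is finished
            return "done", history, trace
        name, args = action
        entry = {"step": step, "tool": name, "args": list(args)}
        try:
            result, attempts = call_with_retry(tools[name], args)
            entry.update(result=result, attempts=attempts)
        except Exception as exc:  # unknown tool or persistent tool failure
            result = None
            entry.update(error=repr(exc))
        trace.append(entry)      # every decision is recorded for later auditing
        history.append(result)
    # Budget exhausted: stop instead of looping forever.
    return "step_budget_exceeded", history, trace

# A policy that never finishes, to show the step budget cutting it off.
def stuck_policy(history):
    return ("echo", ["same input"])

status, history, trace = run_guarded(stuck_policy, {"echo": lambda s: s}, max_steps=3)
print(status, len(trace))  # prints: step_budget_exceeded 3
```

A step budget and a trace do not make an agent more accurate. They only bound the cost of a failure and leave a record of what happened, which is the minimum needed before debugging or auditing is possible.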