As generative AI moves from lab experiments to production systems, driven by rapidly advancing model capabilities, easier API access, and compelling business use cases, enterprises face new challenges in scalability, governance, and delivery. Meeting them requires GenAI Ops: the discipline of managing the lifecycle, deployment, and operations of generative AI applications. This article explains what GenAI Ops is, how it differs from traditional MLOps, how organizations can assess their maturity, and which best practices and tools help them scale responsibly and effectively.

1. What is GenAI Ops — and How Does it Differ from MLOps?

GenAI Ops refers to the processes and practices for deploying and managing generative AI applications in production.

Unlike MLOps, which is built around training, versioning, and serving predictive models, GenAI Ops focuses on orchestrating large language models (LLMs), managing prompt flows, grounding outputs in enterprise data through Retrieval-Augmented Generation (RAG), coordinating agents, and applying safety and compliance constraints (e.g., mitigating bias, preventing harmful content generation, and ensuring data privacy).

While MLOps centers on structured training data and CI/CD pipelines, GenAI Ops demands a new architecture: one that is dynamic, context-aware, and tightly integrated with both unstructured data and external systems. It shifts the focus from model-centric pipelines to prompt- and action-centric orchestration. For example, an MLOps pipeline might optimize a fraud detection model, while a GenAI Ops framework powers an agent that advises users, queries databases, and escalates high-risk cases autonomously.

There are also important design trade-offs: fine-tuning a model gives tighter control but increases costs and complexity, while prompt engineering is faster and more flexible but can be less consistent across contexts.

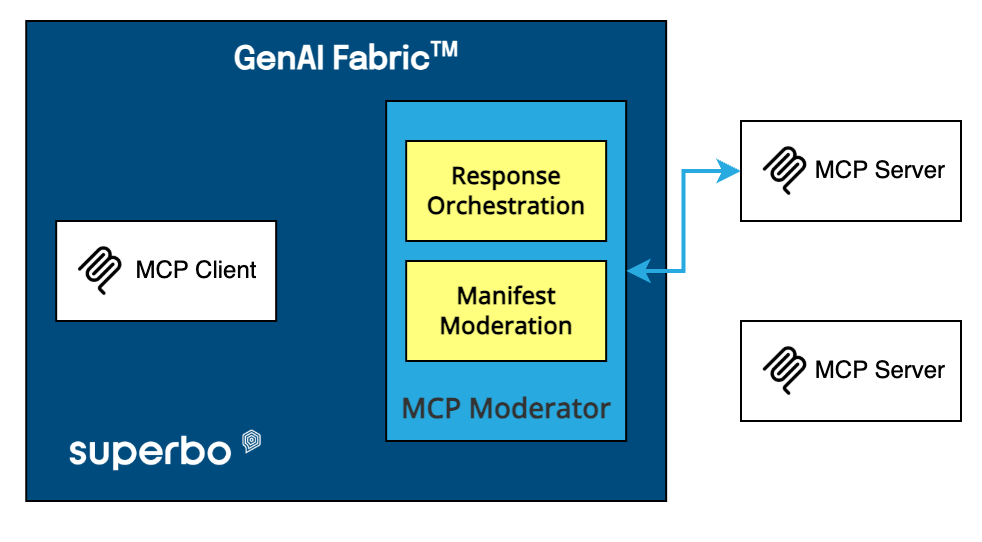

Platforms designed for this new reality, such as Superbo’s GenAI Fabric, aim to provide modular and agentic frameworks to manage workflows that go beyond LLM outputs—integrating actions, reasoning, security, and performance.

2. Measuring Your Maturity in GenAI Ops

Just as enterprises have assessed MLOps maturity, GenAI Ops requires a staged view of readiness. At the earliest stage, teams might rely on ad-hoc prompt experimentation and LLM API calls with no observability or repeatability. From there, they evolve toward building reusable prompt flows, adding evaluation metrics, and experimenting with Retrieval-Augmented Generation (RAG).

As maturity increases, organizations integrate observability, vector databases, and performance monitoring.

At the highest level, they run modular agentic workflows that combine multiple LLMs, custom tools, secure pipelines, and compliance enforcement.

Microsoft and Google offer structured maturity models for GenAI workflows, such as the Microsoft GenAI Maturity Model and Google Cloud’s Generative AI Operations Guide.

3. Building the Right Team for GenAI Ops

To support reliable, scalable generative AI in production, enterprises need a dedicated operational team with specialized roles. These roles focus on maintaining system uptime, managing model and prompt changes, securing sensitive data, and ensuring that all components—from grounding pipelines to agentic logic—perform as expected.

Prompt engineers play a central role in designing, testing, and versioning prompts, ensuring that each interaction with an LLM is well-crafted and contextually accurate. They collaborate with backend and platform engineers, who are responsible for integrating these prompts into application workflows and managing the infrastructure behind APIs and agent orchestration.

RAG architects and data engineers design and maintain the retrieval pipelines, ensuring that generative responses are grounded in up-to-date, relevant enterprise knowledge. These roles are crucial for indexing, chunking, and managing vector search systems.
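
To make the chunking step concrete, here is a minimal sketch of overlapping, word-based chunking. The function name `chunk_text` and the default sizes are illustrative assumptions, not part of any specific platform; production pipelines often chunk by tokens or by document structure instead.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into word-based chunks that overlap with their neighbours.

    Overlap keeps sentences that straddle a boundary retrievable from
    at least one chunk. Sizes are counted in words (an assumption).
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk already reaches the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks
```

Each chunk would then be embedded and written to the vector index; the overlap trades some extra storage for better recall at chunk boundaries.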

LLMOps and ML engineers monitor model performance in production, manage fine-tuning workflows, and maintain model registries. They also help implement runtime controls such as temperature settings, output limits, and safety guardrails.

DevOps and SecOps specialists are essential for managing deployment pipelines, enforcing security policies, handling authentication and rate limiting, and ensuring compliance with data privacy standards.

Evaluation and observability engineers focus on metrics like hallucination rate, user satisfaction, token usage, latency, and response accuracy. They build dashboards and alerts to catch regressions early.
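
As a rough illustration of how such metrics might feed an alert, the sketch below aggregates logged interactions and flags metrics that worsen against a baseline. The `Interaction` record, the field names, and the 10% tolerance are assumptions made for the example; the `hallucinated` label is presumed to come from human review or an automated judge.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Interaction:
    latency_ms: float
    tokens: int
    hallucinated: bool  # assumed label from human review or an automated judge


def summarize(interactions: list[Interaction]) -> dict[str, float]:
    """Aggregate per-request logs into dashboard-level metrics."""
    if not interactions:
        raise ValueError("no interactions to summarize")
    return {
        "hallucination_rate": sum(i.hallucinated for i in interactions) / len(interactions),
        "mean_latency_ms": mean([i.latency_ms for i in interactions]),
        "mean_tokens": mean([i.tokens for i in interactions]),
    }


def regressions(current: dict[str, float], baseline: dict[str, float],
                tolerance: float = 0.10) -> list[str]:
    """Return the metrics that exceed the baseline by more than `tolerance` (relative).

    All three metrics here are "lower is better", so only increases are flagged.
    """
    return [name for name, value in current.items()
            if value > baseline[name] * (1 + tolerance)]
```

A scheduled job could call `regressions` on each evaluation run and page the team when the returned list is non-empty.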

These roles collectively form the operational layer of GenAI Ops—the team that ensures GenAI systems function reliably in real-world environments. Solutions offering microassistant-like architectures, exemplified by platforms like Superbo’s GenAI Fabric, can support this model by allowing each component—retrieval, reasoning, planning, execution, and monitoring—to be managed independently while remaining part of a unified, agentic system.

4. Tools That Enable GenAI Ops

Operationalizing GenAI requires a robust toolkit. At the orchestration layer, frameworks like LangChain, Prompt Flow, and Superbo’s Fabric allow teams to build workflows that chain prompts, actions, and memory together. Vector databases like Pinecone, Weaviate, or Azure AI Search store knowledge embeddings and enable semantic retrieval.

Monitoring and observability are critical. Tools such as GenAI Eval, Weights & Biases, and EvidentlyAI help track model performance, prompt drift, and user satisfaction. On the infrastructure side, platforms like Vertex AI, Azure ML, and Amazon Bedrock provide secure deployment, access control, and managed services. For more advanced implementations, agentic orchestration frameworks like LangGraph and CrewAI offer alternatives to solutions like Superbo’s Fabric.

To control costs, organizations can adopt strategies like caching frequent responses, using smaller fine-tuned models for low-priority queries, or routing only high-risk tasks to high-performance models such as GPT-4.
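
The caching and routing strategies above can be combined in a few lines. This is a sketch under stated assumptions: `CostAwareRouter` is a hypothetical class, the two models are passed in as plain callables rather than real API clients, and the `high_risk` flag is assumed to be set by an upstream classifier or business rule.

```python
from typing import Callable

ModelFn = Callable[[str], str]  # stand-in for a real model client (assumption)


class CostAwareRouter:
    """Serve repeated prompts from a cache and reserve the large model for high-risk tasks."""

    def __init__(self, small_model: ModelFn, large_model: ModelFn) -> None:
        self.small_model = small_model
        self.large_model = large_model
        self.cache: dict[tuple[str, str], str] = {}
        self.calls = {"small": 0, "large": 0, "cache": 0}

    def answer(self, prompt: str, high_risk: bool = False) -> str:
        tier = "large" if high_risk else "small"
        # Normalise case and whitespace so trivially different prompts share an entry.
        # The tier is part of the key so a high-risk request never reuses a small-model answer.
        key = (tier, " ".join(prompt.lower().split()))
        if key in self.cache:
            self.calls["cache"] += 1
            return self.cache[key]

        model = self.large_model if high_risk else self.small_model
        self.calls[tier] += 1
        result = model(prompt)
        self.cache[key] = result
        return result
```

Exact-match caching only helps with literally repeated questions; a semantic cache keyed on embeddings is a common extension but brings its own accuracy trade-offs.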

5. Best Practices for Scaling GenAI Ops

A few core principles underpin successful GenAI Ops practices. First, version everything—not just code and models, but prompts, grounding data, and agent workflows. Establish robust evaluation pipelines, blending automated metrics with Human-in-the-Loop (HITL) reviews, which are crucial for validation, handling ambiguity, and continuous improvement. Techniques like confidence scoring or self-consistency checks can help mitigate hallucinations.
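
Two of these ideas are easy to sketch: deriving a version identifier from the prompt text itself, and a simple self-consistency check that samples several answers and measures agreement. Both function names are illustrative assumptions, and the agreement ratio is only a coarse proxy for confidence.

```python
import hashlib
from collections import Counter


def prompt_version(template: str) -> str:
    """Derive a short, stable version ID from the prompt text.

    Any edit to the template yields a new ID, so logs and evaluations
    can be tied to the exact prompt that produced them.
    """
    return hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]


def self_consistency(answers: list[str]) -> tuple[str, float]:
    """Return the most common answer and the share of samples that agree with it.

    `answers` is assumed to hold several independent samples for the same
    prompt; low agreement is a signal to route the case to human review.
    """
    if not answers:
        raise ValueError("need at least one answer")
    normalized = [a.strip().lower() for a in answers]
    top, count = Counter(normalized).most_common(1)[0]
    return top, count / len(normalized)
```

Exact string matching works for short factual answers; free-form responses would need a semantic comparison instead.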

Security and compliance must be embedded throughout. This includes input sanitization, LLM-as-a-judge frameworks for filtering, and building jailbreak-resistant prompts. For Retrieval-Augmented Generation pipelines, applying PII masking, GDPR-compliant retention policies, and access control ensures data privacy.
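
As an illustration of PII masking before documents are indexed or sent to a model, the sketch below redacts e-mail addresses and phone numbers with regular expressions. The patterns are deliberately simple assumptions and will miss many real-world formats; production systems typically rely on dedicated PII detection services.

```python
import re

# Simplified patterns for illustration only; they do not cover all valid formats.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)
```

Masking at ingestion time keeps raw identifiers out of the vector store, which also simplifies retention and deletion obligations.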

Architectural choices should also account for performance: streaming RAG can lower latency in real-time systems, while batch processing may be more suitable for large-scale summarization.

Agentic workflows are a cornerstone of mature GenAI Ops. For example, a customer support agent might chain: (1) RAG for knowledge base retrieval → (2) LLM for drafting a reply → (3) rule-based compliance check → (4) human escalation if confidence score falls below 90%.
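
The four-step chain above can be sketched as a single function. The retriever, drafting model, and compliance rule are injected as callables, all of them hypothetical stand-ins; the sketch also assumes that a failed compliance check escalates to a human, which the example above does not specify.

```python
from dataclasses import dataclass
from typing import Callable

CONFIDENCE_THRESHOLD = 0.90  # the 90% cut-off from the example above


@dataclass
class Outcome:
    reply: str
    escalated: bool
    reason: str


def handle_ticket(
    question: str,
    retrieve: Callable[[str], list[str]],
    draft: Callable[[str, list[str]], tuple[str, float]],
    is_compliant: Callable[[str], bool],
) -> Outcome:
    passages = retrieve(question)                   # (1) RAG: fetch knowledge base passages
    reply, confidence = draft(question, passages)   # (2) LLM drafts a reply with a confidence score
    if not is_compliant(reply):                     # (3) rule-based compliance check
        return Outcome(reply, True, "compliance check failed")  # assumption: failures escalate
    if confidence < CONFIDENCE_THRESHOLD:           # (4) human escalation on low confidence
        return Outcome(reply, True, "low confidence")
    return Outcome(reply, False, "auto-approved")
```

Keeping each step behind its own callable means the retriever, model, or rule set can be versioned, tested, and swapped independently.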

Lastly, governance matters. Leading organizations are setting up GenAI oversight councils to review usage, assess ethical risks, and enforce policy. In a world where GenAI systems make increasingly autonomous decisions, this layer of human accountability is vital.

Conclusion

GenAI Ops is not a buzzword—it’s the operational backbone that enables enterprise-ready, scalable, and secure generative AI. As companies move beyond experimentation, they must adopt structured practices to manage LLM performance, safety, reliability, and integration.

Platforms built on concepts like microassistants and modular workflows, exemplified by solutions such as Superbo’s GenAI Fabric, are emerging to meet these challenges head-on, empowering enterprises to transition from assisted automation to fully agentic systems.

Special thanks to the bright minds of Dr. Sokratis Kartakis and the experts from Microsoft Azure AI, The Centre for GenAIOps, and Google Cloud for their published and publicly available work.

Recommended Reading:

Microsoft GenAI Maturity Model

https://learn.microsoft.com/en-us/azure/machine-learning/concept-genaiops-maturity

Google Cloud’s Generative AI Operations Guide

https://cloud.google.com/blog/products/ai-machine-learning/introducing-generative-ai-ops

Dr. Sokratis Kartakis: GenAI in Production: MLOps or GenAIOps?

https://medium.com/google-cloud/genai-in-production-mlops-or-genaiops-25691c9becd0

Dr. Sokratis Kartakis: GenAIOps: Operationalize Generative AI – A Practical Guide

https://medium.com/google-cloud/genaiops-operationalize-generative-ai-a-practical-guide-d5bedaa59d78