Security for Agentic AI Solutions: New and Emerging Challenges

Introduction

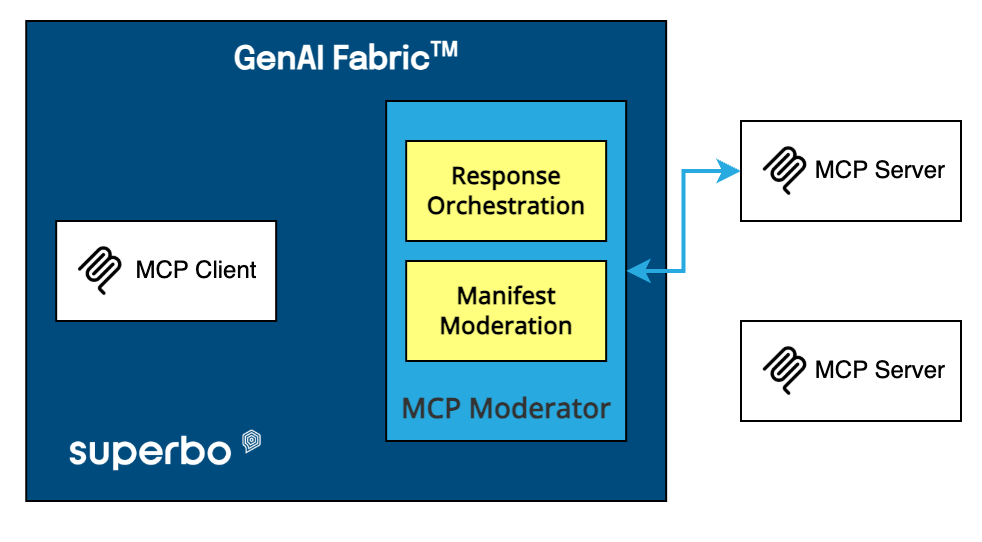

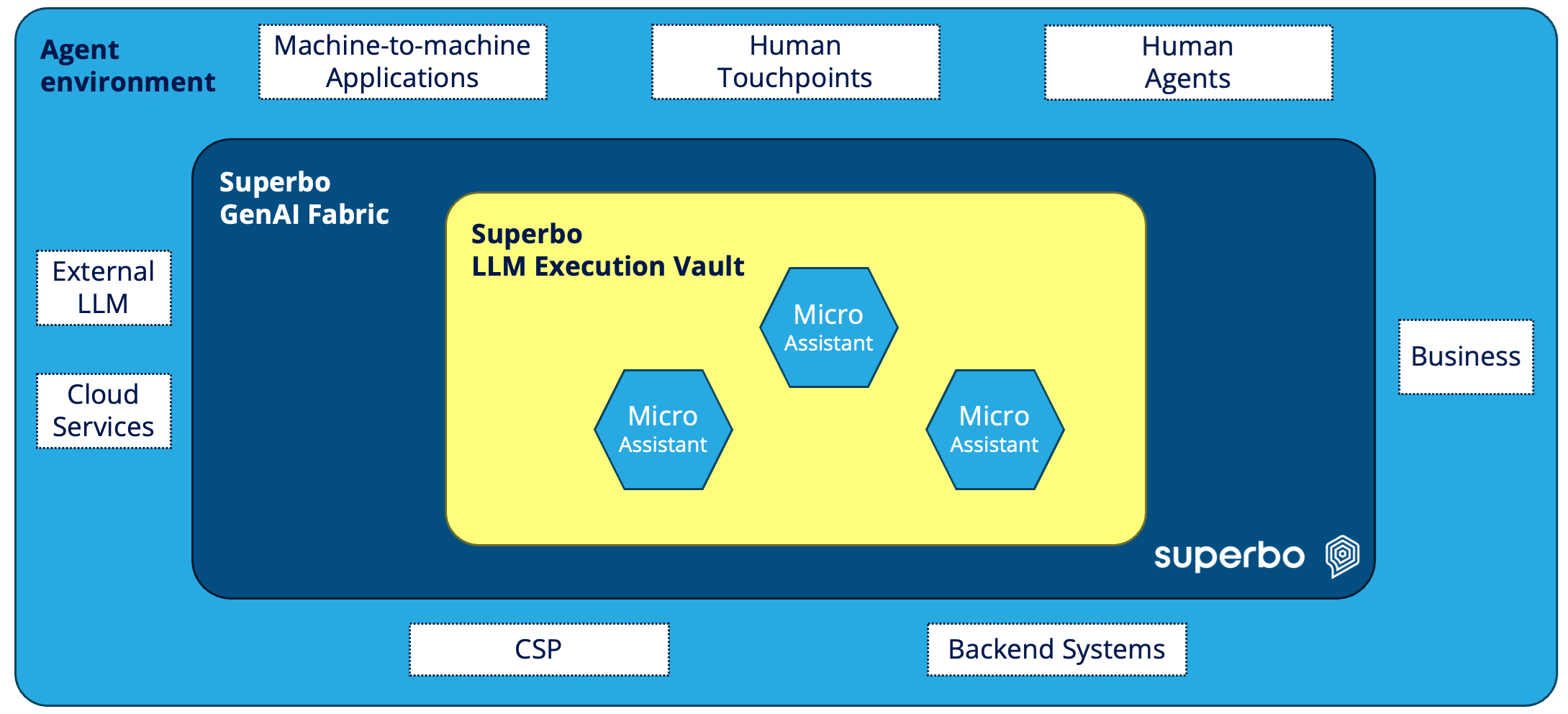

As agentic AI solutions gain traction across industries, they face an evolving set of security challenges that threaten their safe and reliable operation. These challenges include mitigating hallucinations—where AI generates misleading or inaccurate outputs—preventing malicious prompt injections and jailbreaking attempts, safeguarding user privacy, and implementing robust agentic access controls to prevent unauthorized actions. The Superbo GenAI Fabric, a cutting-edge agentic framework, addresses these concerns and secures LLM workflows execution through its standout LLM Execution Vault, which provides advanced security mechanisms. This article discusses the major security considerations for agentic solutions and explains how frameworks like GenAI Fabric ensure resilience against emerging threats.

Key Security Challenges in Agentic AI Solutions

A new category of “security related” challenges and considerations has emerged, with the adoption of GenAI and AI Agents:

-

Hallucinations: “Hallucination” in the context of Large Language Models (LLMs) refers to instances when the AI generates responses or content that appear coherent and plausible but are factually incorrect, fabricated, or misaligned with the provided context or prompt. In Retrieval-Augmented Generation (RAG) applications, hallucinations occur when the AI generates false or misleading information that diverges from the retrieved knowledge base. These hallucinations can lead to inaccurate or harmful outcomes, such as providing incorrect answers, undermining user trust, or spreading misinformation. In the field of AI Agents, hallucinations can manifest as erroneous interpretations, flawed plans, or incorrect reasoning. These hallucinations can drive wrong or catastrophic decisions, especially when the agent operates autonomously in high-stakes environments, such as healthcare, finance, or critical infrastructure. Managing and minimizing hallucinations is essential to ensure safety, reliability, and ethical AI deployment.

-

Prompt Injections and Jailbreaking:In Large Language Models (LLMs) and AI Agents, the prompt is a critical input that guides the model’s behavior and output. Unlike internal components of a solution, such as pre-trained parameters or system-level safeguards, the prompt is externally influenced by the environment, including user input or interactions. This external nature makes prompts inherently dynamic and susceptible to manipulation, especially in open-ended or interactive systems where user inputs are integral to the workflow. Example: An attacker injecting commands like “Ignore the above instructions” could access restricted information or alter agent behavior.

-

Privacy Protection: AI agents often have access and process sensitive personal and organizational data. Without stringent privacy measures, this data could be exposed to unauthorized access or misuse.

-

Agentic Access Control: As agents interact autonomously with multiple systems, ensuring they only access authorized resources and actions becomes critical.

OWASP’s 10 Critical Risks for AI Agentic Solutions

The Open Web Application Security Project (OWASP) has identified ten critical vulnerabilities for Large Language Model (LLM) solutions. These guidelines form the foundation for creating secure agentic AI frameworks:

-

1.

Prompt Injection:

-

Risks of manipulated prompts leading to unauthorized actions.

-

Mitigation: Input sanitization and monitoring for anomalous patterns.

-

2.

Insecure Output Handling

-

Risks of AI outputs containing malicious code or sensitive data.

-

Mitigation: Output sanitization and context validation.

-

3.

Training Data Poisoning

-

Maliciously altered training data embedding vulnerabilities

-

Mitigation: Data provenance tracking and anomaly detection.

-

4.

Denial of Service (DoS)

-

Overloaded systems through resource-intensive queries.

-

Mitigation: Rate limiting and computational complexity caps.

-

5.

Supply Chain Vulnerabilities

-

Risks from compromised third-party plugins or dependencies.

-

Mitigation: SBOM (Software Bill of Materials) tracking and dependency vetting.

-

6.

Sensitive Information Disclosure

-

Risks of exposing private or sensitive data in outputs.

-

Mitigation: Anonymization and access-level validation.

-

7.

Insecure Plugin Design

-

Plugins with excessive privileges causing system vulnerabilities.

-

Mitigation: Granular permission enforcement and sandboxing.

-

8.

Excessive Agency

-

Agents performing unauthorized or overly autonomous actions.

-

Mitigation: Guardrails, human oversight, and output tagging.

-

9.

Overreliance on LLMs

-

Trusting unverified outputs, leading to potential misinformation.

-

Mitigation: External validation and hallucination mitigation systems.

-

10.

Model Theft

-

Risks of stolen model weights or architectures.

-

Mitigation: Encryption and watermarking for intellectual property protection.

Superbo’s GenAI Fabric and LLM ExecutionVault: Comprehensive Security

Many agentic frameworks excel in orchestrating workflows, but trust remains a critical barrier to adoption. This is due to the probabilistic nature of underlying algorithms, which can lead to unpredictable outcomes. Recognizing this challenge, Superbo has spent over two years focusing on the security of LLM applications, including agentic frameworks.

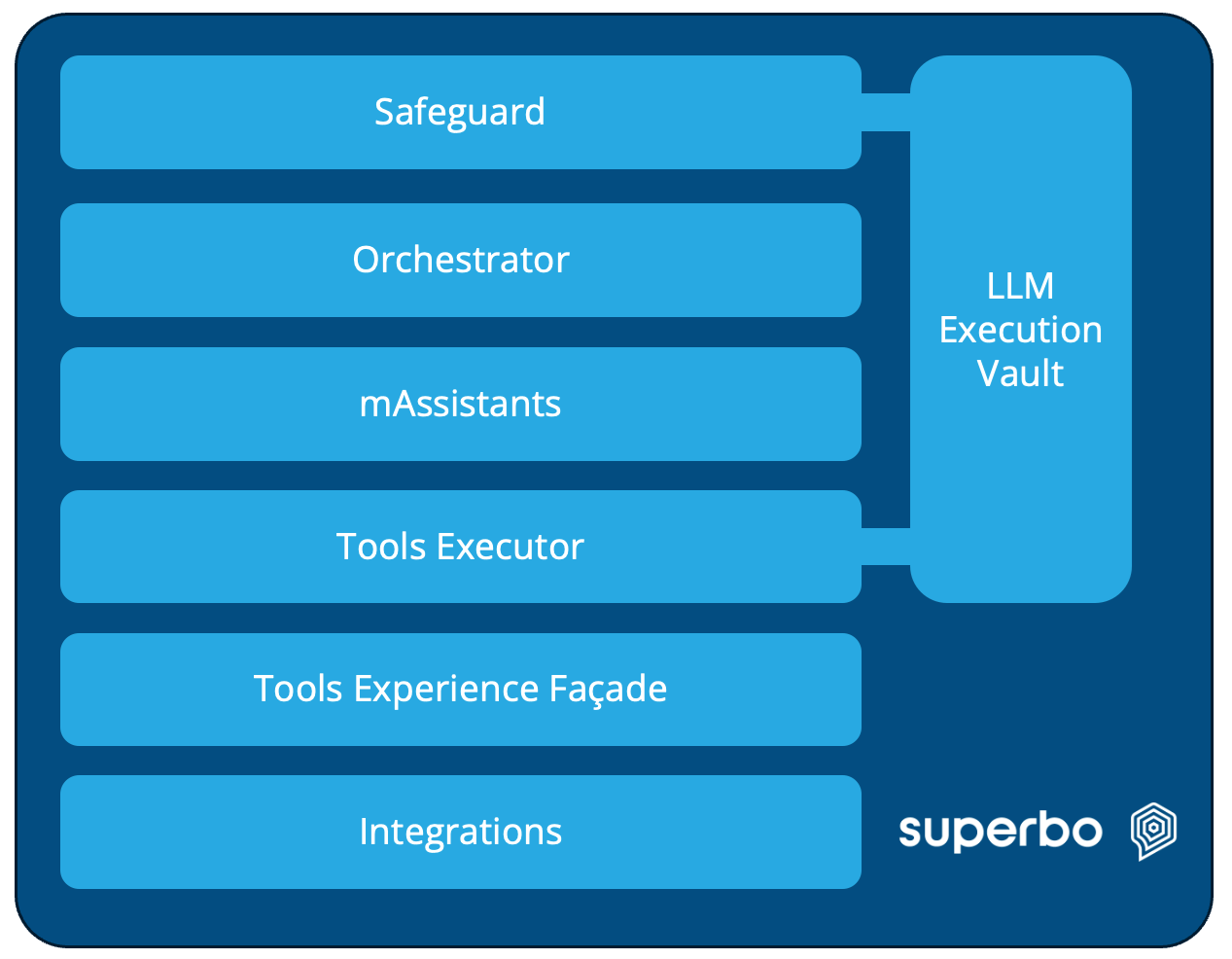

Superbo’s GenAI Fabric is designed with security at its core. At its heart lies the LLM Execution Vault, a specialized security module that addresses a wide range of vulnerabilities, including most of OWASP’s Top 10 risks. This vault not only safeguards agentic tools from unauthorized access but also supervises their secure execution—an often-overlooked yet vital aspect of agentic frameworks. By combining robust protections with innovative design, the GenAI Fabric delivers trust, reliability, and resilience in LLM-powered solutions.

The GenAI Fabric achieves this through the following key features:

-

1.

Data Anonymization

-

Removes personally identifiable information (PII) and sensitive data from prompts, ensuring compliance with GDPR and CCPA. This directly mitigates risks associated with Sensitive Information Disclosure and Insecure Output Handling.

-

2.

Jailbreaking Protection

-

Monitors prompts for injection patterns and blocks unauthorized modifications. Behavioral analysis identifies suspicious commands like “Override all rules” to prevent exploitation. This protects against Prompt Injection and Excessive Agency risks.

-

3.

Hallucination Mitigation

-

Fact-checking within RAG pipelines ensures outputs are grounded in trusted knowledge bases. Outputs are cross-validated to reduce misinformation, addressing Overreliance on LLMs.

-

Reasoning-state validation in agentic workflows reduces models’ fluctuations and guides execution into defined paths.

-

4.

Advanced Access Control

-

Combines OAuth 2.0, RBAC, and session-based authentication to enforce strict permissions. Agent activity is logged and audited, mitigating Insecure Plugin Design and Excessive Agency risks.

-

5.

Rate Limiting and Sandboxing

-

Implements API throttling and computational caps to prevent abuse. Isolates agent operations in secure sandboxes, addressing Denial of Service concerns.

Additionally, within the GenAI Fabric LLM Execution Vault, all agentic tools are executed in a protected mode with strict dependencies. For instance, a “service deactivation” tool cannot be executed unless:

- The user is authenticated

- The service has been properly identified

- The user explicitly consents to the action.

Conclusion

Security is paramount in the deployment of agentic AI solutions. By comprehensively addressing not only OWASP’s Top 10 vulnerabilities but also other ones experienced in production systems through the LLM Execution Vault, the Superbo GenAI Fabric sets a gold standard for secure, resilient AI frameworks. Organizations adopting such measures can confidently navigate the rapidly evolving AI landscape, ensuring trust, compliance, and operational efficiency while mitigating risks.