The AI Hub

Keep up to date with change

AI isn’t a sidebar. It’s the strategic equivalent of rolling out PCs or the internet across an entire organization. To be “AI First” means embedding AI into the very fabric of operations, decision-making, and culture, transforming AI from a tool into the organization’s central nervous system. In AI First enterprises like Duolingo and Shopify, AI guides everything, from workflow design to customer engagement, operating not as an add-on but as the driving force behind products, services, and strategy.

Europe’s landmark AI Act is here – and it’s reshaping how companies worldwide build and deploy AI systems. Much like GDPR shook up data privacy globally, the EU AI Act introduces sweeping rules for AI with extraterritorial reach. In other words, even if your enterprise is based outside Europe, the Act likely applies if you offer AI-driven products or services in the EU market or if your systems affect individuals in the EU. With provisions rolling out from August 2025 onward, tech leaders and CxOs must understand the Act’s requirements now to avoid hefty penalties and business disruptions.

AI agents are transforming from isolated bots into collaborative, autonomous digital workers. But what happens when these agents can discover, communicate, and work with each other across systems, companies, and domains? That’s the vision of the Internet of Agents (IoA) — a networked ecosystem where AI agents interact like web services, creating new possibilities for business collaboration and intelligence.

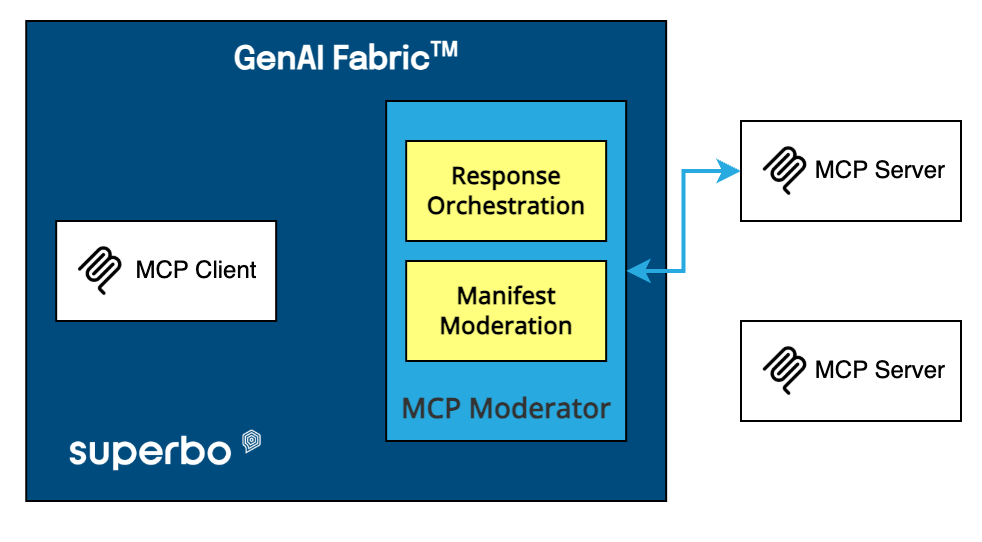

As generative AI moves from lab experiments to production systems, driven by rapidly advancing model capabilities, greater API accessibility, and compelling business use cases, enterprises are confronting new challenges in scalability, governance, and delivery. Meeting those challenges demands robust operationalization. Enter GenAI Ops: the discipline of managing the lifecycle, deployment, and operations of generative AI applications. This article explores what GenAI Ops is, how it differs from traditional MLOps, how organizations can assess their maturity, and which best practices and tools can help them scale responsibly and effectively.

As enterprise AI adoption soars, so do the energy and resource demands of AI systems. Every impressive demo of a large language model hides a hefty carbon footprint in the background. Training GPT-3 (175 billion parameters) gobbled up an estimated 1,287 MWh of electricity, emitting 502 metric tons of CO2 – about as much carbon as 110 gasoline cars running for a year. It’s not just electricity: running data centers for AI consumes vast amounts of water for cooling.

In the world of enterprise AI, change is afoot. Organizations have long deployed artificial intelligence in the form of specialized, isolated models – a recommendation system here, a fraud detection engine there – each doing its own narrow job.

But a new paradigm is emerging. AI agents and agentic workflows are transforming these once-disconnected solutions into modular, orchestrated, goal-driven systems. This post explores how enterprise AI architectures are evolving from siloed models toward flexible AI agent frameworks, and what this means for forward-looking tech leaders.

AI agents are often misunderstood, with many assuming they are just glorified AI assistants or chatbots. However, AI agents represent a paradigm shift in software development, moving from passive response systems to proactive, autonomous decision-makers.

To clarify this distinction, let’s explore AI agents through an example that everyone can grasp: the evolution of cars into self-driving vehicles.